Micron: A No-Brainer AI Bet

AI-fueled growth, U.S. manufacturing, dividends, and share repurchases make Micron the ideal low-risk, high-return AI play

When people mention AI, they instantly think of LLMs like ChatGPT or Claude, or of the chips from NVIDIA and AMD.

They may also realize the opportunity in chip manufacturers like TSMC. However, what everyone seems to miss is the need for memory.

The rise of AI has increased computational needs to levels never seen before, and the growth is exponential. Now, the largest bottleneck for increasing AI accelerator production isn’t foundry capacity or raw materials. It’s HBM.

HBM stands for High Bandwidth Memory, a type of ultra-fast memory built into every AI accelerator. Without it, even the most advanced GPUs are bottlenecked. Demand for HBM has driven massive growth at the memory producers, and they’re overwhelmed with orders. This, of course, presents an opening for investors who want to take advantage of the massive opportunity that AI presents.

Micron is quickly becoming a memory powerhouse

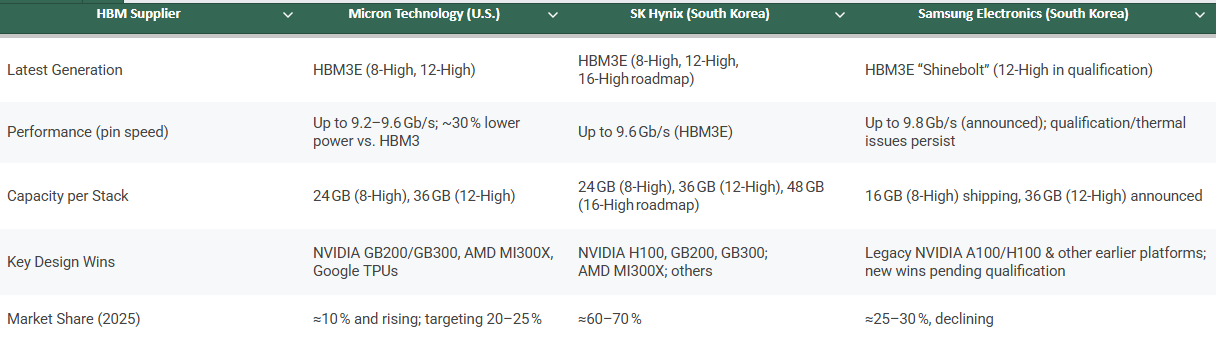

The HBM market is an oligopoly composed of only three players: Samsung, SK Hynix, and Micron. Samsung, which used to be the giant in the memory space, has seen its dominant position weaken. Internal inefficiencies, nepotism, and bad management have caused it to lose the R&D battle to the other two.

SK Hynix has been the fastest developer in the HBM space by a slight margin, but Micron is a very close second and is already ahead in some areas. Its HBM3E is more power-efficient, drawing 20% less power than competitors, and it delivers 50% more memory per stack thanks to its 12-high design. That’s a critical advantage in a world where memory capacity and thermal efficiency are becoming just as important as raw compute.

NVIDIA selected Micron as a primary source for their new GB200 Grace-Blackwell chips, and for the upcoming GB300 systems, making Micron one of the foundational vendors for next-gen AI compute. This endorsement didn’t come casually. NVIDIA would not bet their most important systems on a second-tier supplier.

Micron didn’t stop with NVIDIA. It is also a key supplier for AMD, Google, and the other hyperscalers. Micron is now fully sold out of its HBM output for calendar 2025.

Here’s a quick comparison of the three main memory producers:

That kind of demand has triggered massive investment. Micron is responding by ramping CapEx to expand capacity and meet what it calls a multi-year demand inflection.

This isn’t speculative growth; it’s unmet demand that will sustain Micron’s growth for years.

Beyond HBM: Micron is riding the memory wave

Micron’s growth isn’t just about HBM. AI systems rely on a suite of memory technologies, and Micron is well-positioned in all of them. Data centers need DRAM for CPUs, GDDR6X for GPUs, and NAND flash for storing training datasets and serving large-scale models.

Here’s a breakdown of the core memory types powering AI, and Micron’s positioning in each:

Demand across the memory stack is surging. The rollout of DDR5 in servers is accelerating as AI workloads drive memory intensity. NAND remains essential for data-heavy AI use cases. And GDDR6X is increasingly used in high-performance graphics cards being repurposed for AI tasks.

What’s more, HBM itself tightens the DRAM supply-demand equation. Each HBM package consumes up to 3× more silicon than DDR5 to produce the same number of bits. Future versions like HBM4 will increase this to 4×. That means capacity used for HBM effectively constrains supply for other DRAM formats, helping to stabilize pricing in traditional segments.
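The supply squeeze described above can be made concrete with a small sketch. It assumes "3× more silicon" means one wafer of HBM yields one third of the bits of a DDR5 wafer, and the wafer count and 30% HBM allocation are purely hypothetical numbers chosen for illustration, not Micron figures.

```python
def dram_bit_output(total_wafers: float, hbm_share: float, trade_ratio: float) -> dict:
    """Split wafer capacity between HBM and conventional DRAM.

    Output is measured in DDR5-wafer equivalents: one DDR5 wafer yields 1 unit
    of bits, one HBM wafer yields 1 / trade_ratio units (assumed interpretation
    of the trade ratio quoted in the article).
    """
    hbm_wafers = total_wafers * hbm_share
    ddr5_wafers = total_wafers - hbm_wafers
    hbm_bits = hbm_wafers / trade_ratio
    return {
        "hbm_bits": hbm_bits,
        "ddr5_bits": ddr5_wafers,
        "total_bits": hbm_bits + ddr5_wafers,
    }


# Hypothetical fab: 100 wafers of capacity, 30% shifted to HBM.
for ratio in (3.0, 4.0):  # HBM3E-era ratio, then the HBM4 ratio from the text
    out = dram_bit_output(total_wafers=100.0, hbm_share=0.30, trade_ratio=ratio)
    print(f"trade ratio {ratio:.0f}x: DDR5 bits {out['ddr5_bits']:.1f}, "
          f"HBM bits {out['hbm_bits']:.1f}, total {out['total_bits']:.1f}")
```

Under these assumptions, moving 30% of wafers to HBM removes 30 units of conventional DRAM supply while adding back only 10 (at 3×) or 7.5 (at 4×), so total bit output falls even though no capacity was idled.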

US-based production is a long-term edge

Micron is the only U.S. company in the HBM oligopoly and holds the largest advanced memory manufacturing capacity in America. That’s a huge advantage in today’s geopolitical climate. The United States is the largest consumer of AI compute, and being U.S.-based gives Micron priority access, subsidies, and protection.

Micron is also leading the biggest chip project in U.S. history: a $100 billion investment to develop four fabs in upstate New York. The CHIPS and Science Act has already allocated $6.1 billion in funding to this expansion. Simultaneously, the company is constructing an R&D and manufacturing center in Boise.

This isn’t a short-term play. AI accelerator demand is expected to grow 10x over the next decade. But it doesn’t stop at data centers. Laptops, smartphones, and PCs will need more memory to run AI locally.

Inference is moving to the edge, and that means more memory everywhere.
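To put the "10x over the next decade" figure in annual terms, here is a minimal sketch that converts a total growth multiple into a compound annual growth rate. It assumes smooth compounding over exactly ten years, which real demand will not follow; the function name is illustrative.

```python
def implied_cagr(growth_multiple: float, years: float) -> float:
    """Compound annual growth rate implied by a total growth multiple."""
    return growth_multiple ** (1.0 / years) - 1.0


# 10x growth over 10 years, as cited for AI accelerator demand.
rate = implied_cagr(growth_multiple=10.0, years=10.0)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption, 10x in a decade works out to roughly 26% growth per year, sustained for ten years.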

The market is still mispricing Micron

Despite all of this, Micron trades at roughly the same level it did in November 2020, before ChatGPT even launched. Today it sits at $66, far below its 2024 highs of $142. Investors remain uncertain, afraid of the cyclical nature of memory, macro fears, and U.S.-China tensions.

But those concerns increasingly look exaggerated.

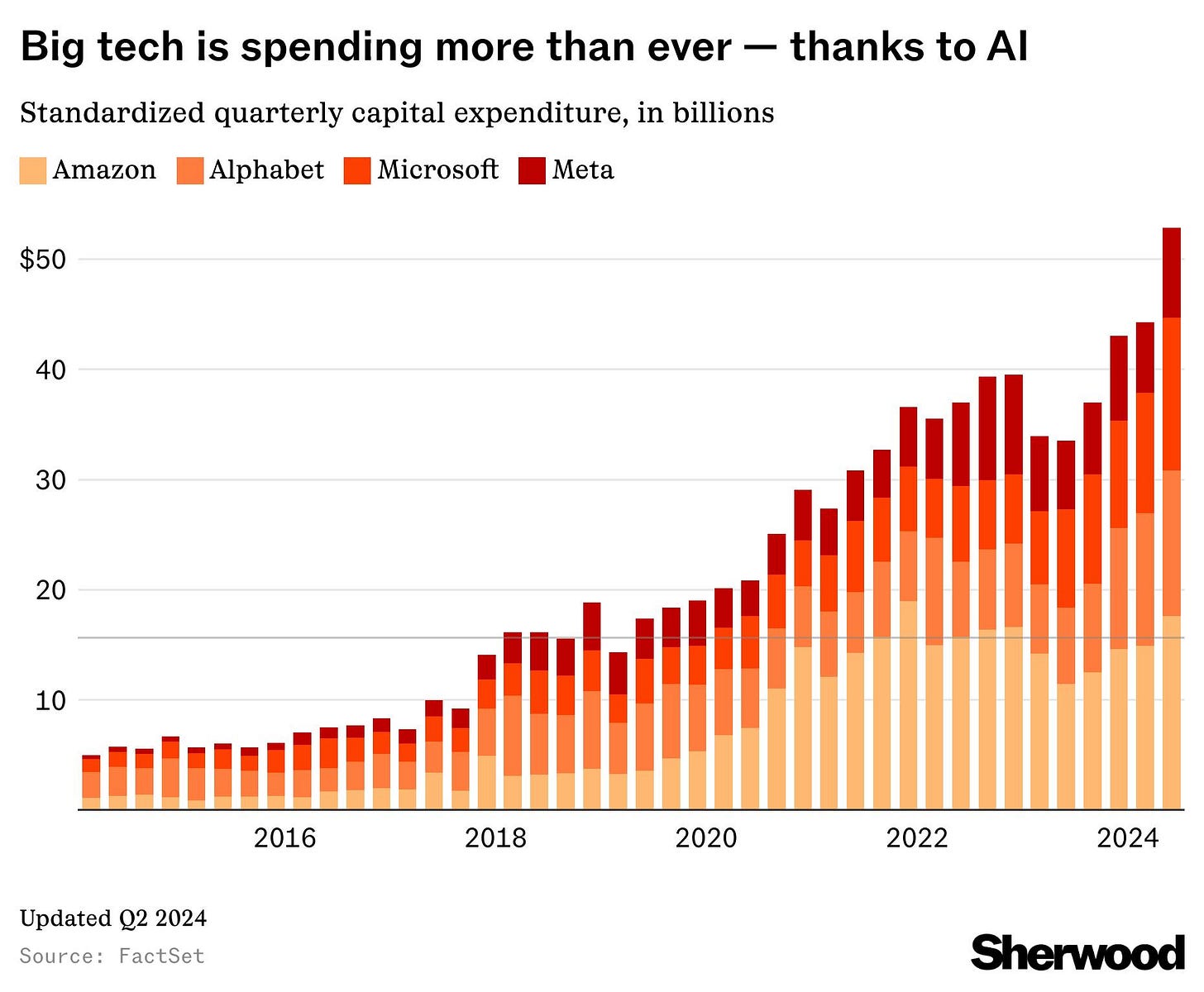

AI compute demand isn’t slowing. Executives at Microsoft and Amazon have made that clear. OpenAI just raised $40 billion, pushing its valuation to $300 billion. GPU cloud startups are proliferating. Everyone is scrambling to secure compute.

Some fear that emerging models from China or companies like DeepSeek will flood the market and slow growth. But this ignores the Jevons Paradox: the more productive AI becomes, the more infrastructure is demanded, as the ROI increases. And memory sits at the heart of that infrastructure.

Others are worried about geopolitical shocks.

Could a trade war cause a downturn?

Maybe. However, the global economy just survived a pandemic that locked down entire countries. Currently, employment remains strong and inflation is easing, with consumption and investment holding up well. If the world adapted to COVID, it will adapt to tariffs.

Most likely, deals will be reached and the cycle will turn. Investors stuck in doom-and-gloom thinking are missing the bigger picture: AI is here to stay, and billions of people using AI will require a ton of compute power, including memory.

So what’s the real risk in buying Micron?

Let’s break it down.

Will AI keep improving and boosting productivity? Yes.

Will AI capex continue to rise to meet demand? Yes.

Will billions of people eventually use AI daily? Most likely yes.

So what’s the real risk in buying Micron?

Some might say competition, or claim the stock was simply overvalued before and now sits at a fair price. However, once we get into the specific numbers and financials, the opportunity looks much clearer.

Let’s dig into the financials now.

Strong financials that support high growth

Micron just posted the best earnings in its history. Operating cash flow reached an all-time high of $3.94 billion, representing 49% of revenue. This is a cash-generating machine. However, only $857 million translated into free cash flow due to a massive $3.1 billion in net capex during the quarter. This level of capital investment, paired with Micron’s leadership in cutting-edge technology and US-based manufacturing, forms the foundation for what could become a trillion-dollar giant.
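The quarterly figures above can be cross-checked with a few lines of arithmetic. This is a rough sketch using only the rounded numbers quoted in the text, so small gaps against the reported values are expected; the variable names are illustrative.

```python
# All values in billions of USD, as quoted in the article (rounded).
operating_cash_flow = 3.94
ocf_share_of_revenue = 0.49
net_capex = 3.1
reported_free_cash_flow = 0.857

# Quarterly revenue implied by "49% of revenue".
implied_revenue = operating_cash_flow / ocf_share_of_revenue

# Free cash flow approximated as operating cash flow minus net capex.
approx_free_cash_flow = operating_cash_flow - net_capex

# Difference against the reported figure, attributable to rounding of inputs.
rounding_gap = reported_free_cash_flow - approx_free_cash_flow

print(f"Implied quarterly revenue: ${implied_revenue:.2f}B")
print(f"Approximate free cash flow: ${approx_free_cash_flow:.2f}B")
print(f"Gap vs. reported FCF: ${rounding_gap * 1000:.0f}M")
```

The implied quarterly revenue is roughly $8.0 billion, and the approximate free cash flow of about $0.84 billion sits close to the reported $857 million, with the difference explained by rounding in the quoted inputs.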

Bloomberg Intelligence projects the HBM market to reach a $130 billion market size by 2033, with Micron holding a 23% market share.

That alone would translate into $30 billion in revenue, approximately Micron’s current annual revenue, but in a much higher-margin segment. On top of that, pricing power from shortages, improved margins from scale and US-based manufacturing, and growth in other segments like DRAM, NAND, and managed NAND, driven by data center expansion and the increasing memory needs of AI-enabled PCs and gaming, could push Micron toward $75 billion in revenue.
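The revenue figures in this scenario follow from simple multiplication, sketched below. The market size and share are the Bloomberg Intelligence projections cited above and the $75 billion total is the article's scenario; the split between HBM and other segments is derived here for illustration only and is not a forecast.

```python
# Projections quoted in the article, in billions of USD.
hbm_market_2033 = 130.0
micron_hbm_share = 0.23
total_revenue_scenario = 75.0

# HBM revenue implied by market size times market share.
hbm_revenue = hbm_market_2033 * micron_hbm_share

# What the other segments (DRAM, NAND, managed NAND) would need to contribute.
other_segments_revenue = total_revenue_scenario - hbm_revenue
hbm_fraction = hbm_revenue / total_revenue_scenario

print(f"Implied HBM revenue: ${hbm_revenue:.1f}B")
print(f"Required from other segments: ${other_segments_revenue:.1f}B")
print(f"HBM share of scenario revenue: {hbm_fraction:.0%}")
```

In other words, HBM would supply about $29.9 billion, and reaching $75 billion would require roughly $45 billion from the rest of the memory portfolio.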