Can AMD beat NVIDIA?

NVIDIA has enjoyed a dominant leadership for years, but AMD is taking the necessary steps to end their reign

NVIDIA has long dominated the AI accelerator space. With 90 percent market share in AI data centers, a decades-long head start with CUDA, and powerful rack-scale systems like NVLink and NVSwitch, it has grown into the largest company in the world.

But 90 percent market share doesn't last forever, just ask Intel.

NVIDIA's dominance is beginning to show cracks. Supply has been tight for years, prices remain prohibitively high, and when a company squeezes its customers too hard for too long, they eventually start looking for alternatives.

That’s where AMD came in.

Now, AMD isn’t content with being the second plate, they want to become the main dish. And they’re making a strong case for themselves.

From laggard to contender

AMD was embarrassingly late to the AI race. While NVIDIA had the H100 and H200, AMD had nothing remotely competitive. Even when the MI300X was launched, software became the bottleneck. ROCm wasn’t open source, and the AI stack wasn’t ready.

The result? A distant second place.

But things have changed, and fast. AMD is no longer just fixing past mistakes, it's building a foundation that could threaten NVIDIA’s entire moat.

They’ve open-sourced ROCm, acquired Silo AI and Nod.ai, with Nod.ai now fully dedicated to improving ROCm. And they’re doubling the size of their AI software team every six months.

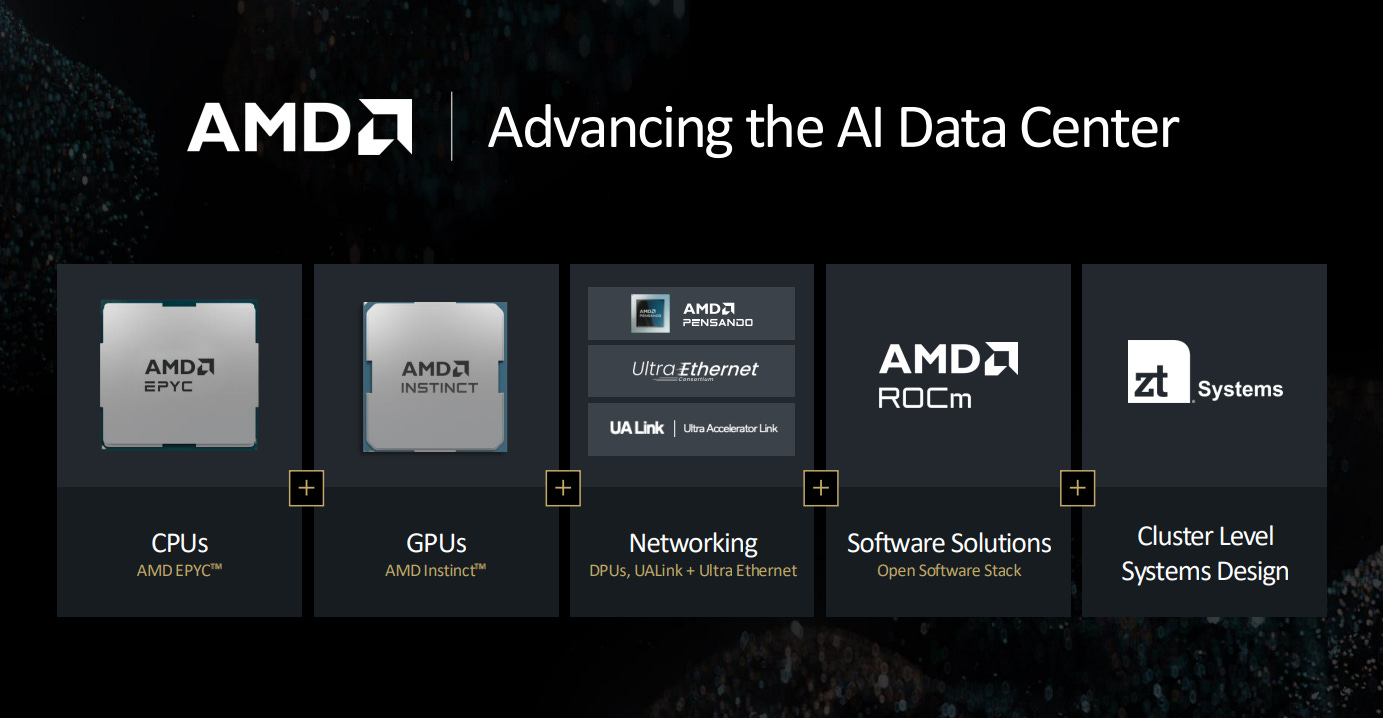

In terms of hardware, AMD is fast-tracking its accelerator chips. The MI355X and MI400 series are positioned to challenge and potentially overtake NVIDIA’s dominance. The acquisitions of Pensando, Xilinx, and ZT Systems are strategic moves that strengthen AMD’s ability to break through NVIDIA’s hold on the market.

This shift from hardware-first to platform-first is transforming AMD from raw ore into a polished diamond.

Beating NVIDIA in Inference

Inference will be the largest driver of compute demand for AI. This is a shared conclusion among industry experts, and some research suggests it could be ten times larger than the AI training market. With numbers like that, it has naturally become a major focus for AMD. This is a multi-trillion dollar industry in the making.

Think of all the inference required to run LLMs, personalized chatbots, image and video generation, and, most importantly, AI agents and robotics. AI will be embedded in every aspect of our lives, and it will require immense computing power. AMD is positioning itself as a leader in delivering the best performance.

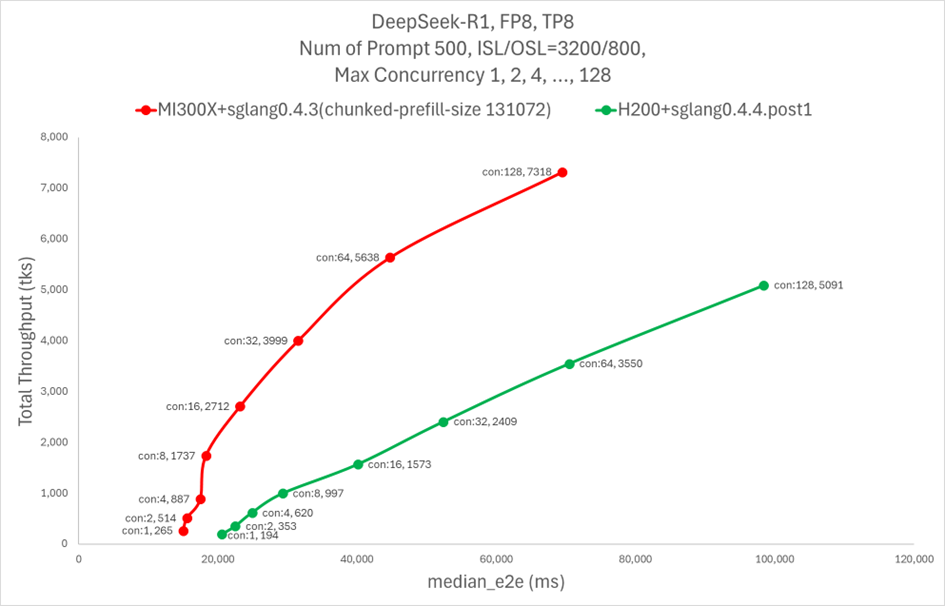

A good example is DeepSeek. AMD’s MI300X delivers over twice the DeepSeek throughput compared to NVIDIA’s H200, increasing from 6,484.76 to 13,704.36 tokens per second after AMD introduced AITER, their new library of optimized AI kernels. That’s the kind of performance improvement you rarely see from software alone.

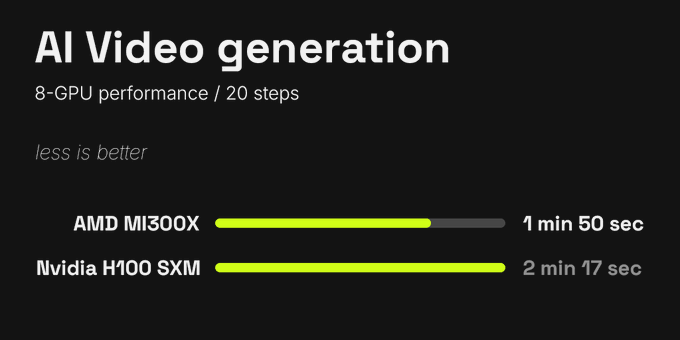

Another example comes from Higgsfield AI, one of the leading startups in video generation. Higgsfield reported a significant performance advantage with AMD chips over NVIDIA’s, observing 25 percent faster performance.

And considering both the performance gains and cost, Higgsfield found that AMD GPUs are 40 percent more cost-effective for video inference than NVIDIA's.

CEO Alex Mashrabov even stated:

"Jensen Huang mentioned that all pixels will be generated in the future, not rendered. What he didn’t realize is that they likely won’t be generated on NVIDIA GPUs, but on AMD GPUs instead."

These are just a few examples from Higgsfield’s tests, where the performance advantage of AMD is clear:

This kind of leap is what makes people pay attention. AMD’s hardware is closing the gap, and its software is catching up fast.

And this is just the beginning.

MI355X and MI400: Next-gen firepower

The impressive performance of the MI300X has driven strong demand for it, and for the upcoming MI325X. The MI300X is already being heavily used by Microsoft Copilot and Meta Llama, and it powers the most powerful supercomputer in the world, El Capitan. AMD also announced that their new MI325X accelerators are powering OpenAI’s ChatGPT-based Microsoft Copilot.

AMD’s accelerators are available across major cloud platforms including Oracle, IBM, Azure, and now DigitalOcean, which has also joined the party. Demand is also rising among independent AI cloud providers such as TensorWave, Vultr, Hot Aisle, and RunPod.

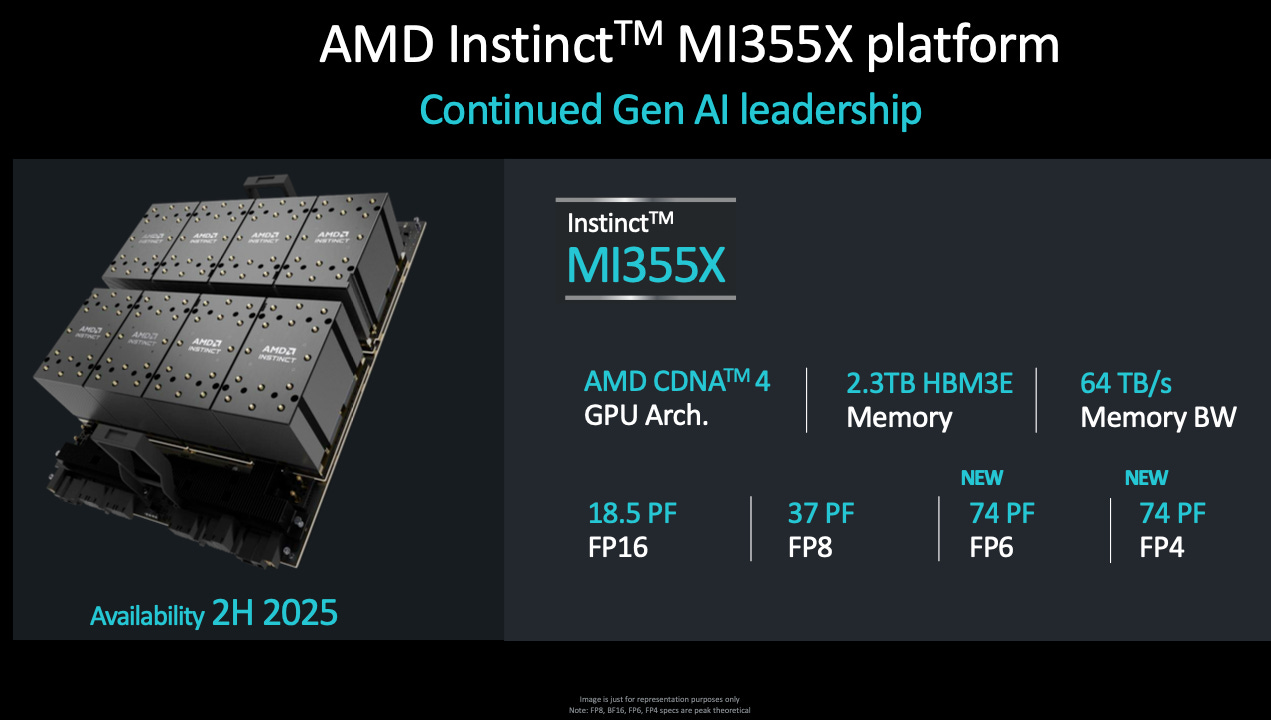

As successful as AMD’s previous accelerators have been, with the Instinct lineup becoming the fastest-growing product family in its history, the best is yet to come. Later this year, AMD will release the MI355X, which promises 35 times the inference performance of its previous generation. This chip is set to go head-to-head with NVIDIA’s Blackwell and Blackwell Ultra.

Oracle has already committed billions to a 30,000-GPU cluster using the MI355X, marking one of the biggest validations AMD has received in the AI arms race.

Looking ahead to 2026, AMD plans to launch the Instinct MI400 APU, built on an entirely new architecture and leveraging Xilinx technology. This could give AMD a major edge in programmable AI workloads across edge, embedded, and data center environments.

UALink: Destroying the NVLink moat

One of NVIDIA’s most underappreciated advantages is NVLink, their custom interconnect that enables massive GPU clusters with ultra-low latency and high bandwidth. It’s the backbone of the 72-GPU racks NVIDIA sells at premium prices.

But now, that moat is being directly challenged.

Enter UALink, a new open standard developed by a powerhouse consortium: AMD, Google, Microsoft, Meta, Apple, Broadcom, Amazon, Intel, and others. Based on AMD’s Infinity Fabric and Broadcom’s networking IP, UALink is designed to do everything NVLink does and possibly more.

The newly published UALink 1.0 spec supports:

Up to 1,024 accelerators in a single fabric

Up to 800 Gb/sec per port, configurable in x1, x2, or x4 links

Less than 1 microsecond round-trip latency at rack scale

Massive power and cost savings over Ethernet and PCIe

If it delivers as promised, UALink could end NVLink’s reign and potentially force NVIDIA to support an open standard. That would mark a major shift in AI data center dynamics, and AMD would be leading it.

Busting the CUDA moat

NVIDIA didn’t win just because of fast chips. It built a world-class software moat with CUDA, cuDNN, and tight integrations across the stack.

But AMD is catching up here too:

ROCm is now open source, giving AMD a major advantage in collaborating with the open source community and encouraging developers to adopt their chips.

The team is focused on inference optimizations for its AI accelerators. These improvements are already evident in the DeepSeek performance gains with AITER, and they’re now pushing harder than ever to deliver top-tier inference performance across all models and use cases.

AI kernel performance has doubled in just a few months.

Nod.ai is fully dedicated to optimizing AMD’s software stack.

AMD is pouring resources into software. Chris Sosa, Director of Engineering - AI Software at AMD, has announced a bold plan to double the size of their team every six months.

If AMD continues improving ROCm’s usability, fixing bugs, enhancing compatibility with machine learning libraries, and maximizing performance across supported systems, they’ll be able to deliver top-notch inference performance. If that happens, NVIDIA’s pricing power with CUDA could be seriously undermined, and AMD could capture a significant share of the massive inference market.

AMD needs scaling up

So far, AMD has lacked full rack-scale solutions. No NVSwitch equivalent. No UFM. No NVLink. That’s been a critical weakness.

But that’s about to change with:

ZT Systems is launching full-rack UALink-based server designs in 2025

AMD integrating Pensando DPUs and NICs into their rack systems

Upcoming MI355 and MI400 accelerators scaling seamlessly over UALink

These systems could match or even outperform NVIDIA’s DGX line, especially when paired with AMD’s superior chiplet expertise.

AMD got serious about AI too late. That’s why NVIDIA pulled so far ahead. AMD lacked a solid CUDA equivalent, strong accelerators, an NVLink-like interconnect, and a scalable rack and server solution with competitive performance.

But that’s changing this year. AMD is launching a new generation of chips based on a new architecture, starting with the MI355X. ROCm has improved dramatically, inference performance is now elite, and ZT Systems will deliver full server and rack solutions equipped with Pensando NICs and the first UALink specification, which has shown benchmark performance on par with NVLink.

Beyond data center: AI everywhere

AMD isn’t stopping at cloud GPUs. They’re building AI into everything:

Ryzen AI APUs are now available in laptops and desktops, combining CPU, GPU, and NPU in a single SoC, ideal for local LLM inference

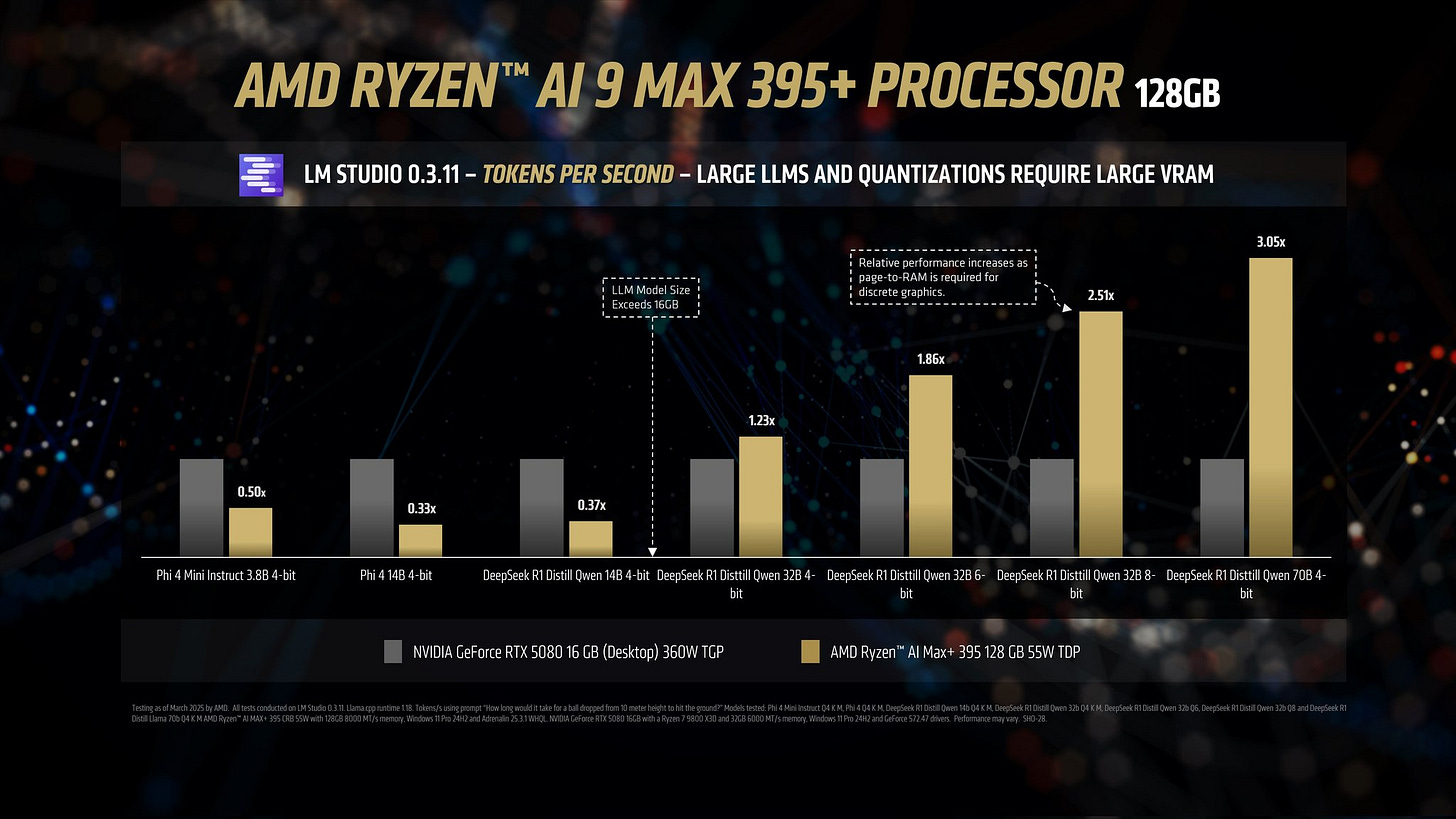

These new APUs have shown impressive performance across general-purpose computing, gaming, and AI inference. Strix Halo, the most powerful of the new Ryzen AI APUs, has benchmarked over 3× faster than the RTX 5080 in DeepSeek R1 AI benchmarks:

Strix Halo was chosen by Framework to power their new desktop computer, designed to compete directly with NVIDIA’s Project DIGITS. For just $2,000, it delivers tremendous specs at a significantly lower price than DIGITS, which is expected to launch at over $3,000.

It’s one of the most impressive products AMD has ever launched, and it offers strong optimism for what AMD can deliver at the data center level.

Xilinx FPGAs are being adapted for AI-specific edge workloads like drones, surveillance, 3D printing, and traffic systems

The upcoming MI400 APU will integrate FPGA flexibility directly into next-gen AI accelerators, giving AMD an edge in projects that require more personalized or adaptable solutions.

And let’s not forget, AMD now owns the world’s largest FPGA portfolio, thanks to the biggest semiconductor acquisition in history. That’s a latent strength Wall Street is still ignoring.

Xilinx: The Under-the-Radar Giant

Xilinx is the global leader in FPGAs, and its acquisition marked the largest in semiconductor history. FPGAs are hardware-programmable chips that can be adapted to a wide range of functions, making them incredibly versatile.

These chips are widely used in industries poised for massive growth, including aerospace, telecommunications, robotics, surveillance, drug discovery, and data centers. For example, one big client of AMD’s Versal FPGAs is Starlink, which started using them last year in their latest generation of satellites.

AMD is also integrating Xilinx technology into its NPUs. Their new Versal and Ryzen AI processors include an NPU built on the XDNA architecture, enhancing both compute capacity and power efficiency.

Looking ahead, FPGAs will strengthen AMD’s competitiveness in the data center space. The upcoming MI400 accelerator series will incorporate advanced Xilinx technology, and AMD has a wide range of possibilities to explore in the future.

As AMD continues to exploit the synergies with Xilinx, it not only sharpens its edge in chip architecture but also broadens its customer base, creating a moat that extends far beyond CPUs and GPUs.

AMD is on the right path

AMD is playing the long game. And it has a strong foundation to build on.

AMD currently offers the best CPUs on the market, across all tiers, including the data center, where EPYC CPUs now command close to 40% market share, making them the most chosen CPUs by hyperscalers. AMD also has more experience with chiplet architectures, giving it a critical advantage in building powerful SoCs for AI accelerators. Unlike NVIDIA, which has traditionally relied on monolithic designs, AMD holds the architectural edge.

Now, AMD is building on this base to become a leader in AI too.

They started late with the Instinct accelerator lineup, but now they’re accelerating at a pace the industry has never seen. The company plans to launch two new chips with two new architectures in under two years, an unprecedented cadence in the semiconductor world.

AMD has also expanded strategically through key acquisitions to challenge NVIDIA from every angle:

Xilinx, one of the world’s largest semiconductor firms, brings FPGA expertise that will help improve AMD’s entire product stack. It also opens AMD to fast-growing markets like space, defense, and robotics.

Pensando strengthens AMD’s position in systems, contributing NPUs and NICs that enable more efficient in-system networking and data movement.

ZT Systems, a server designer and manufacturer, is the final piece of the puzzle. With this acquisition, AMD can now integrate its chips, networking hardware, software, and server designs into fully optimized systems.

Conclusion: Keep an eye on AMD

Everything is coming together. And it all starts this year.

The MI355X accelerator will be the first to integrate Pensando DPUs and NICs, and it will debut in a proprietary server design, a major milestone. With this launch, AMD has a real opportunity to make a statement.

And in 2026, with the release of the MI400, AMD could go from contender to king.

NVIDIA’s market cap is currently 18 times higher than AMD’s, a gap that will become much harder to justify if AMD continues to execute on its roadmap successfully.

Insiders have started buying, signaling their optimism, and shareholders should feel the same. This is a multi-trillion dollar market, and it will create multiple multi-trillion dollar companies.

And there’s a good chance that AMD makes the list.

Nvidia won’t be beaten but AMD looks like a great catch up trade

In my view, AMD will eventually offer a full AI development platform. Chinese software firm DeepSeek has already shown that skilled engineers can write low-level optimizations to make traditionally “weaker” NVIDIA GPUs train AI models far more efficiently. I expect those same techniques to be applied to AMD hardware. In fact, George Hotz’s Tinygrad project is already hard at work commoditizing petaflop-scale training on GPUs—proof that open, high-performance AI stacks on AMD are within reach.