$AMD: The Next 10-Bagger?

Is AMD really undervalued? And if it is, how big is the opportunity? Everything you need to know is covered in this article.

10-bagger is a term that’s often thrown around carelessly when it comes to stocks. It almost feels like any company with momentum gets hyped as the next 10-bagger. AMD is the opposite. Although the company’s results are excellent and its valuation looks cheap, especially considering its massively expanding TAM, the stock is still down 47% from its all-time high.

NVIDIA is overshadowing AMD’s major AI efforts, and the market isn’t recognizing how AMD is steadily taking over the CPU market that was once dominated by Intel.

However, as much as AMD might seem like the ugly duckling in the AI stock space, one thing is clear: this company is laying the groundwork to become both a hardware and software giant, and a key player in a world where compute power has become the most valuable resource.

How does a $180 billion company become a 10-bagger? By attacking a multi-trillion dollar market. And to do that, AMD is undergoing a fundamentally transformative process that could turn it into a $2+ trillion company.
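The 10-bagger framing is ultimately arithmetic, so here is a minimal sketch of it. It uses the article’s figures (a ~$180 billion market cap and a stock 47% below its all-time high); the holding periods are hypothetical and only illustrate how much annual growth a 10× return would require.

```python
# Back-of-the-envelope math for the "10-bagger" framing.
# Inputs from the article: ~$180B market cap, stock 47% below its all-time high.
# The holding periods in the loop are hypothetical, chosen only for illustration.

MARKET_CAP_B = 180.0   # current market cap, in billions of dollars
DRAWDOWN = 0.47        # decline from the all-time high


def target_market_cap(multiple: float, market_cap_b: float = MARKET_CAP_B) -> float:
    """Market cap (in $B) implied by a given return multiple."""
    return multiple * market_cap_b


def gain_to_recover(drawdown: float) -> float:
    """Fractional gain needed to return to a prior high after a drawdown."""
    return 1.0 / (1.0 - drawdown) - 1.0


def required_cagr(multiple: float, years: float) -> float:
    """Compound annual growth rate needed to reach `multiple` in `years`."""
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    print(f"10x target: ${target_market_cap(10):,.0f}B")
    print(f"Gain needed to regain the all-time high: {gain_to_recover(DRAWDOWN):.0%}")
    for years in (5, 7, 10):  # hypothetical horizons
        print(f"10x in {years} years needs {required_cagr(10, years):.1%} per year")
```

Note that 10× of $180 billion is $1.8 trillion, so a $2+ trillion outcome implies slightly more than a 10× return from here.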

Let’s break down the different parts and products of this company to understand the full opportunity. But first, let’s look at the compute market, and its central role in this new technological era.

The Need for Compute

As I’ve explained in a previous article, I believe the AI era represents a $195 trillion opportunity for the industry over the next decade. This revolution will be even bigger and more important than most people realize. The companies that position themselves well in this hypergrowth cycle will see massive returns for their stocks. I believe AMD is among the best-positioned players, with the potential to be at the core of the AI world.

They offer the best general-purpose processors (CPUs), and their AI accelerator lineup is likely the strongest at the chip level for inference. Soon, they’ll introduce rack-scale AI accelerator systems. They’re also the leaders in the FPGA space, a category of highly programmable chips used in AI, robotics, aerospace, and more. And through innovations in APUs and NPUs, AMD is transforming the small-scale AI landscape.

But it’s not just about hardware. AMD is also turning a past weakness into a strength: software. They’ve rapidly improved their ROCm software platform, acquired the largest AI lab in Europe, and through their purchase of Nod.ai and Mipsology, have reshaped their software team, making AMD a critical force in the open-source AI community.

Let’s explore in detail what AMD has to offer the AI revolution, and how this $180 billion market cap company can become a multi-trillion-dollar behemoth.

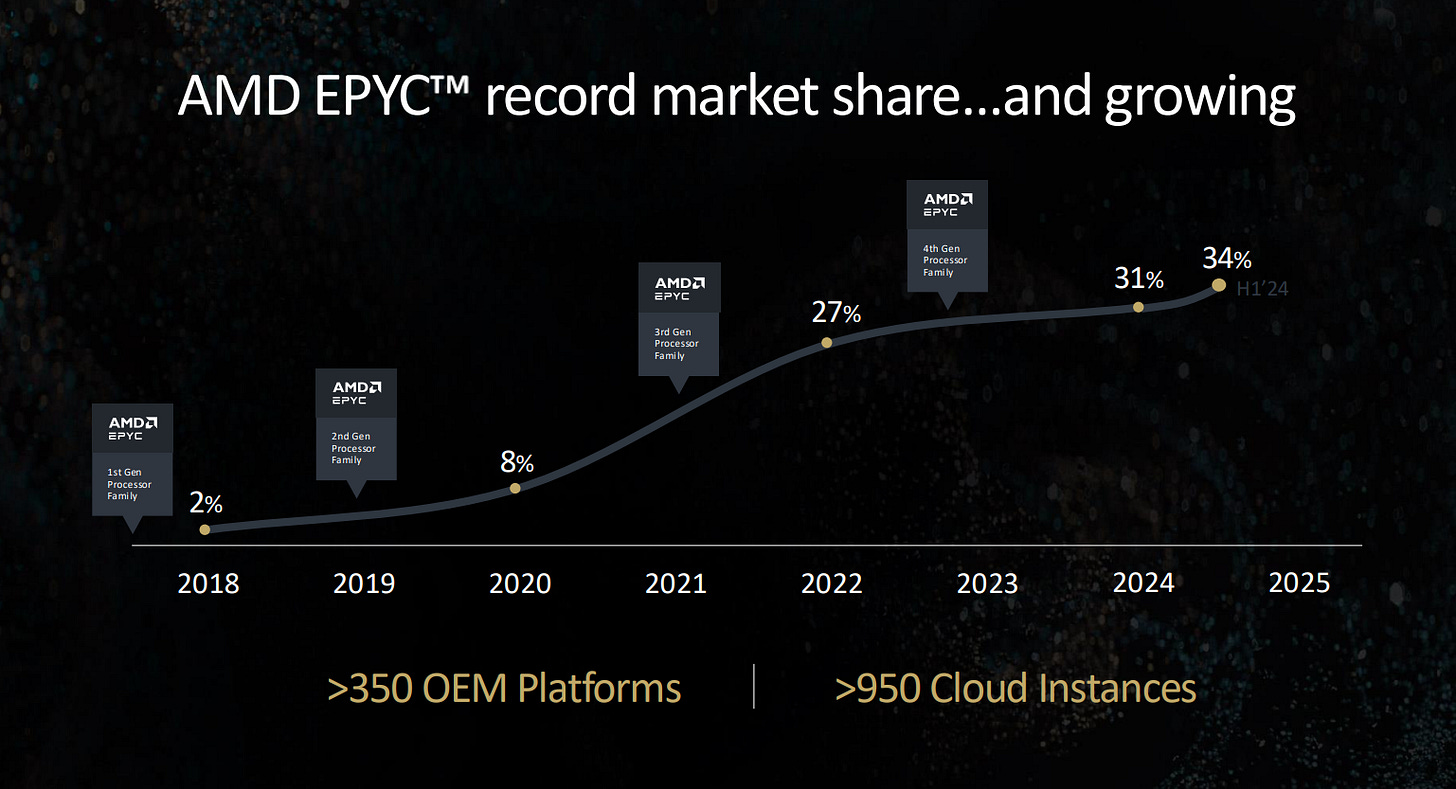

CPUs

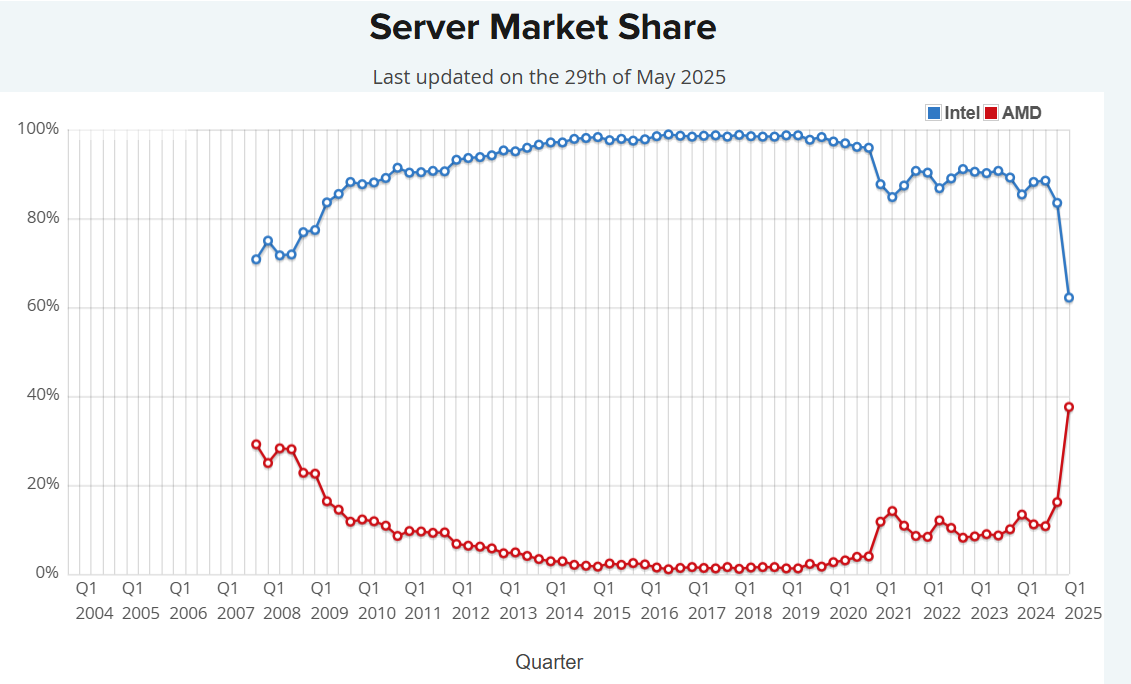

Let’s start with the core of any computer. While CPUs no longer hold the prestige they once did, they remain essential for general-purpose computing, a market with a multi-trillion dollar TAM, and are present in every AI data center, working alongside GPUs. In the growing AI data center space, AMD holds a dominant market share thanks to its EPYC processors. AMD’s share of the server CPU market rose from just 2% in 2018 to 34% in 2024. Among hyperscalers, AMD is now the #1 CPU provider.

This achievement is far from trivial. It’s the result of consistently offering best-in-class technology and innovating faster than any competitor. EPYC processors are so powerful and efficient that some companies even asked NVIDIA to collaborate with AMD on hybrid systems that pair NVIDIA GPUs with AMD’s EPYC chips instead of NVIDIA’s Arm-based Grace CPUs.

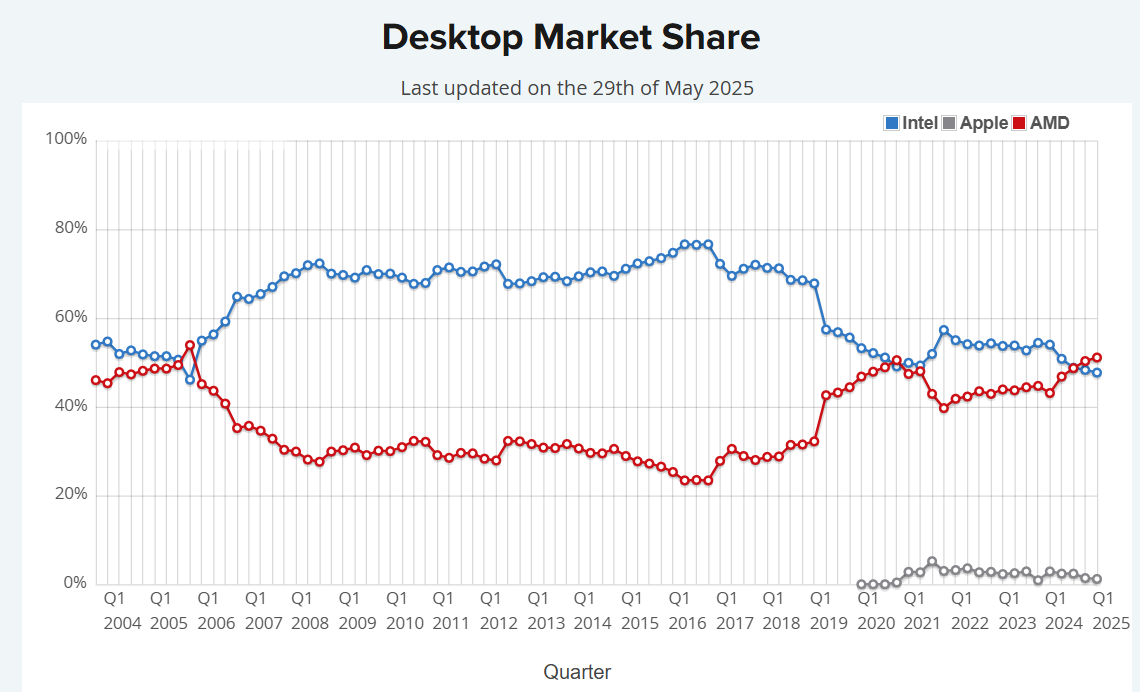

A market once entirely dominated by Intel is now being systematically overtaken by AMD. It’s a testament to how a former underdog can become a leader. Lisa Su has proven she can execute long-term strategies to achieve these kinds of transformations, and a similar narrative may unfold in AMD’s battle against NVIDIA in the GPU space.

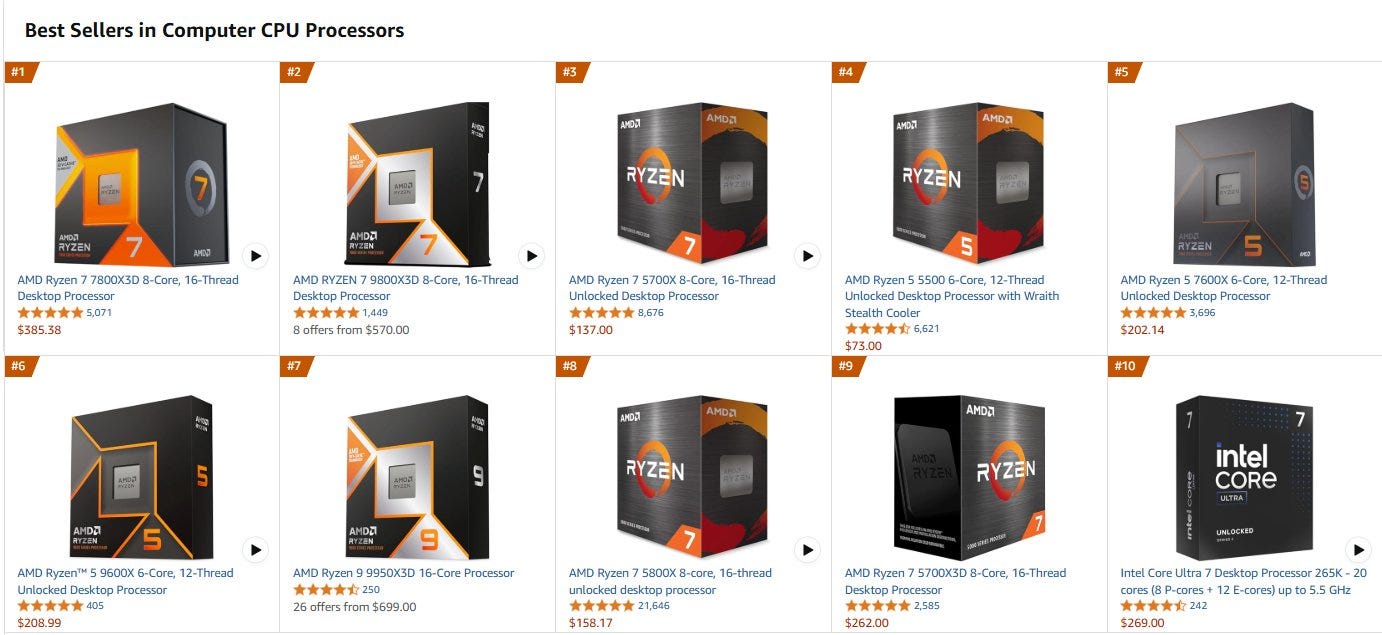

In the retail CPU market, AMD is again steadily climbing to the top. As of now, 13 of the 15 best-selling processors on Amazon US are AMD’s. In March, AMD reportedly captured nearly 80% of the CPU market on Amazon US, generating five times more revenue than Intel. In Germany, AMD holds over 90% market share in both retail CPUs and GPUs.

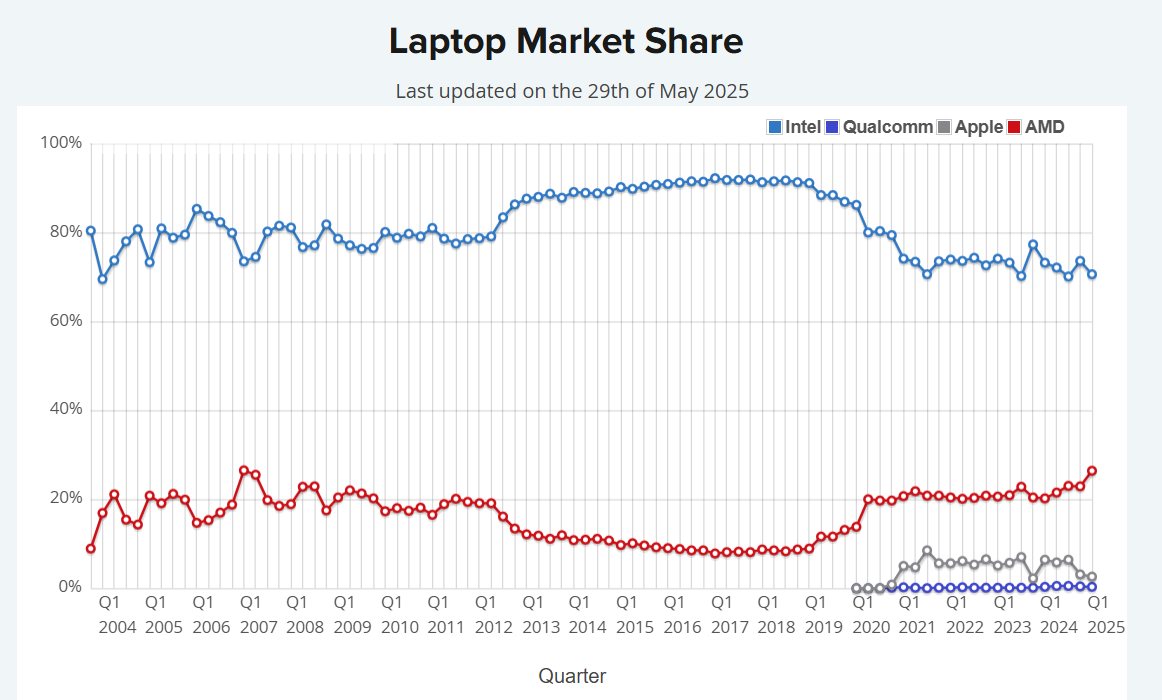

Whether it’s desktop, laptop, or server, the trend is clear: AMD is outperforming Intel across the board, and this growing dominance will ultimately be reflected in both financial results and stock performance.

Their weakest area has been laptops, but that’s changing fast. This year, AMD announced the launch of the first Dell commercial PCs powered by AMD Ryzen AI PRO processors. Dell, the third-largest laptop vendor, had previously offered only Intel chips. This new partnership will allow AMD to accelerate market share gains in the laptop segment as well.

AI Accelerators

Although NVIDIA is worshipped as the indisputable leader in this space, many investors have become overconfident when evaluating their moat. They assume NVIDIA will always lead, and that AMD will only absorb excess demand. But that’s a flawed view.

In reality, AMD is aiming to take over the entire space. They’re not fighting for scraps, they’re actively trying to deliver the best AI system solution on the market.

Inference has been their main focus, and for good reason: it is expected to become a far larger market than training, and AMD’s chips excel in this area.

The Inference Edge

Inference will be the largest driver of compute demand in the AI era. This is a shared conclusion among industry experts, with some research suggesting it could be ten times larger than the training market. With numbers like that, it has naturally become a strategic focus for AMD. We’re talking about a multi-trillion dollar industry in the making.

Think about all the inference needed to run LLMs, personalized chatbots, image and video generation, and, most critically, AI agents and robotics. AI will be embedded in every part of our lives, and it will require immense computing power. AMD is positioning itself as a leader in delivering that power.

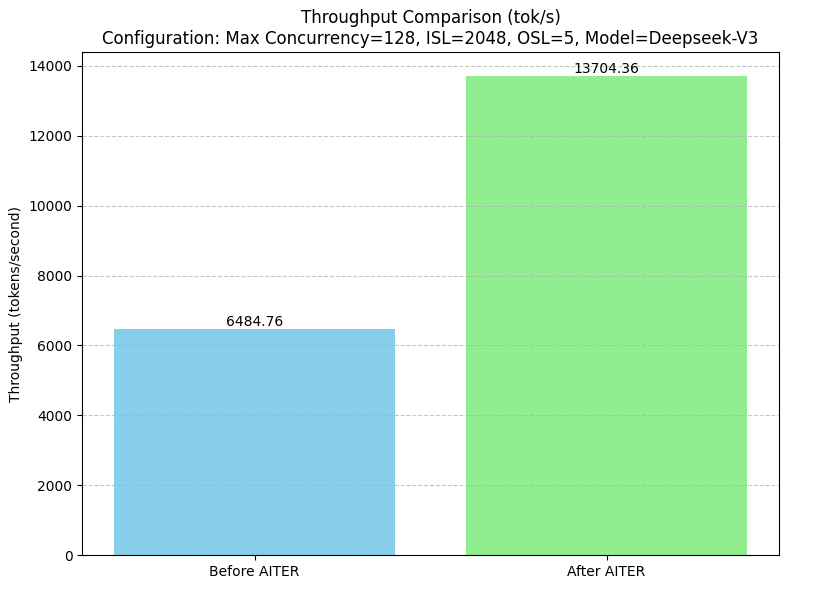

A strong example is DeepSeek. After the release of AITER, AMD’s new library of optimized AI kernels, the MI300X’s DeepSeek throughput more than doubled, going from 6,484.76 to 13,704.36 tokens per second and pulling ahead of NVIDIA’s H200. That’s the kind of software-driven performance leap you rarely see.
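To put the two DeepSeek throughput figures quoted above in perspective, here is the ratio between them as a few lines of Python. It uses only the numbers given in the text; nothing else is assumed.

```python
# Speedup implied by the two DeepSeek throughput figures quoted in the text
# (tokens per second, before and after AITER).
before_tps = 6_484.76
after_tps = 13_704.36

speedup = after_tps / before_tps
print(f"Speedup: {speedup:.2f}x ({speedup - 1:.0%} more tokens per second)")
```

A ratio above 2.0 is what justifies describing the gain as "more than doubling."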

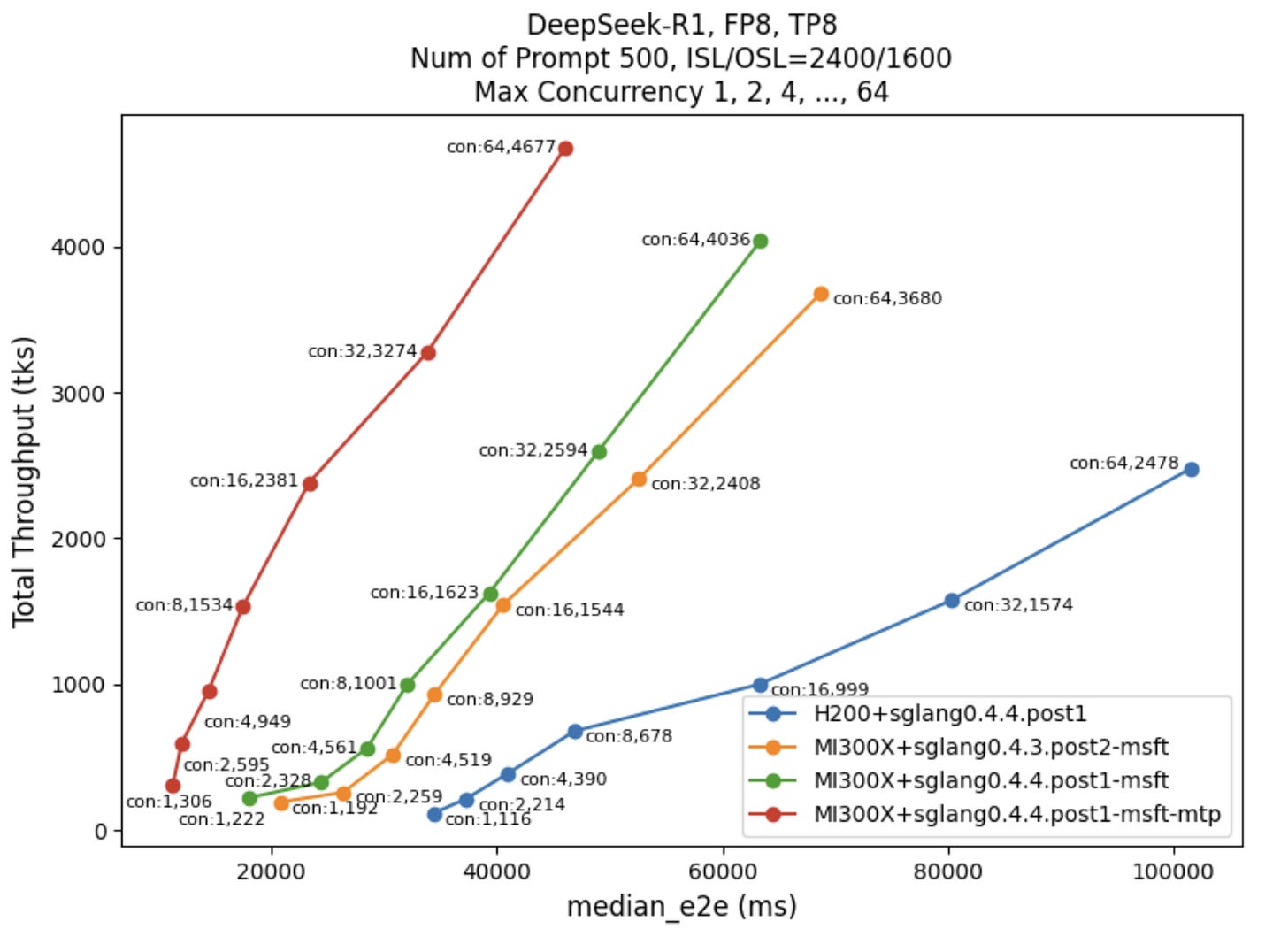

These vast improvements have enabled AMD to surpass NVIDIA in certain inference workloads. One example is their collaboration with Microsoft, which has been actively seeking alternatives to NVIDIA. Microsoft is now working closely with AMD to optimize software and enhance system performance.

Thanks to these recent optimizations, AMD’s accelerators have significantly outperformed NVIDIA’s in DeepSeek inference tasks:

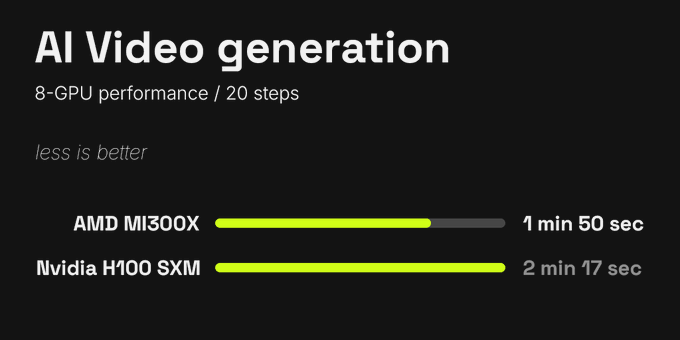

Another case is Higgsfield AI, one of the top startups in video generation. They reported a 25% performance advantage with AMD chips over NVIDIA’s. And when factoring in cost, AMD GPUs were found to be 40% more cost-effective for video inference workloads.

As CEO Alex Mashrabov stated:

“Jensen Huang mentioned that all pixels will be generated in the future, not rendered. What he didn’t realize is that they likely won’t be generated on NVIDIA GPUs, but on AMD GPUs instead.”
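The two Higgsfield AI figures (25% more performance, 40% more cost-effective) can be combined to see what they imply about relative hardware cost. This is a sketch under one loud assumption that the source does not state: that "cost-effectiveness" means performance per dollar.

```python
# What the Higgsfield AI figures imply about relative hardware cost.
# ASSUMPTION (not stated in the source): "cost-effectiveness" means
# performance per dollar, i.e. cost_effectiveness = performance / price.

perf_ratio = 1.25                # AMD performance relative to NVIDIA (+25%)
cost_effectiveness_ratio = 1.40  # AMD performance per dollar relative to NVIDIA (+40%)

# Rearranging cost_effectiveness = performance / price gives:
# price_ratio = perf_ratio / cost_effectiveness_ratio
price_ratio = perf_ratio / cost_effectiveness_ratio

print(f"Implied AMD price relative to NVIDIA: {price_ratio:.2f}x "
      f"(about {1 - price_ratio:.0%} lower)")
```

Under that reading, most of the cost advantage comes from higher performance, with a smaller contribution from a lower price per unit of hardware.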

These are just a few examples where AMD’s performance edge is becoming clear. This is the kind of shift that makes people pay attention: AMD’s hardware is closing the gap, and its software is catching up fast.

And this is just the beginning.

The impressive results from the MI300X have sparked strong demand, both for it and for the newer MI325X. The MI300X is already powering Microsoft Copilot and Meta’s Llama models, while its sibling, the MI300A, powers El Capitan, the most powerful supercomputer in the world. AMD also confirmed that its new MI325X accelerators are being used to power OpenAI’s ChatGPT-based Microsoft Copilot.

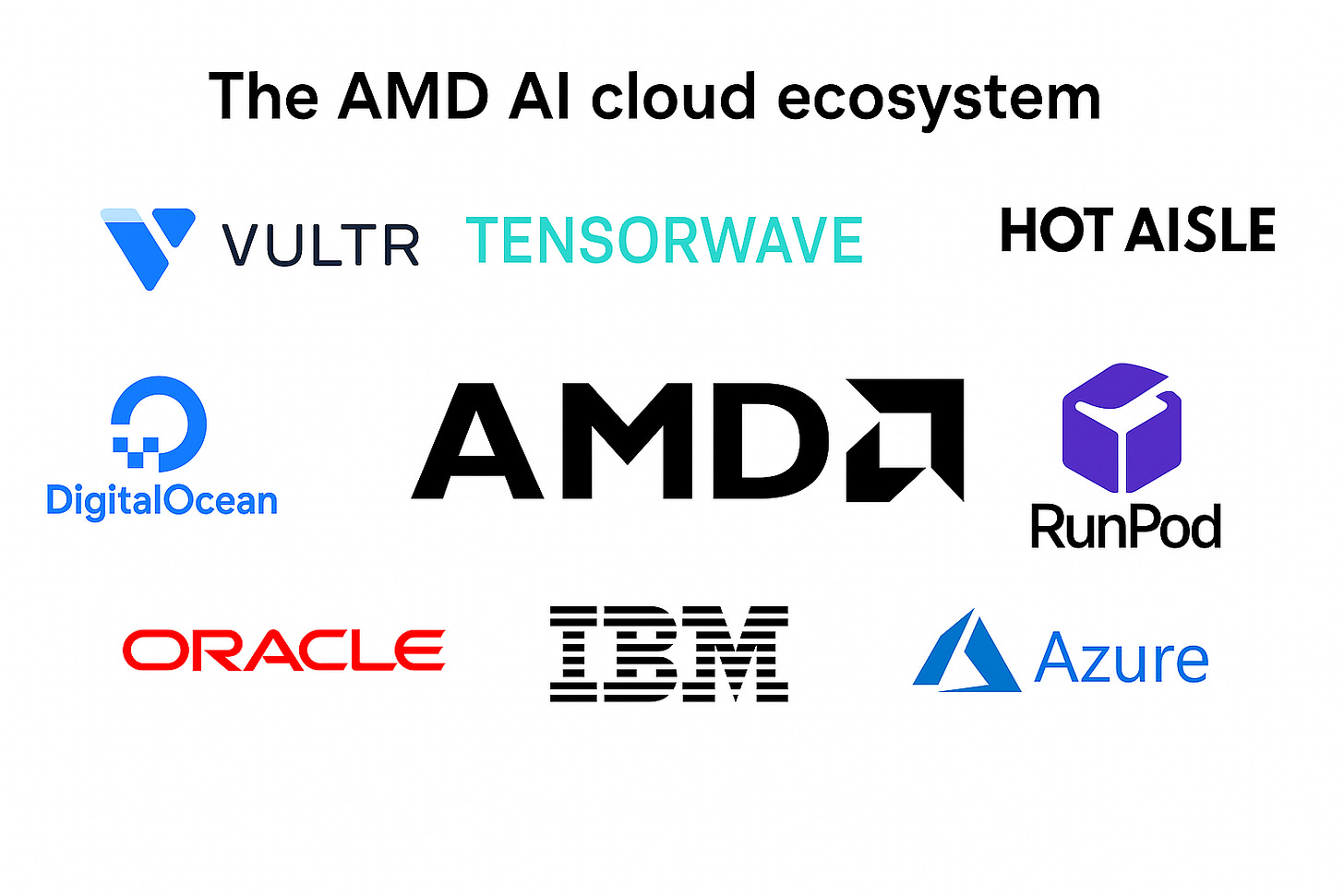

AMD’s AI accelerators are now available across major cloud platforms, including Oracle, IBM, Azure, and DigitalOcean, which recently joined in. Demand is also growing among independent AI cloud providers like TensorWave, Vultr, Hot Aisle, and RunPod.

As successful as AMD’s Instinct lineup has been, becoming the fastest-growing product family in the company’s history, the best is yet to come. Later this year, AMD will release the MI355X, which promises 35× the inference performance of its previous generation. It’s set to go head-to-head with NVIDIA’s Blackwell and Blackwell Ultra.

There are already a few early success stories:

Oracle has committed billions to a 30,000-GPU cluster using the MI355X, one of AMD’s most significant wins in the AI arms race.

On the partnership front, Fujitsu has joined forces with AMD to build a hybrid system combining Fujitsu’s upcoming 2nm ARM processor with AMD’s AI accelerators, expected to launch in 2027.

Saudi Arabia is also becoming a key AMD partner. Through a new $10 billion joint venture with Humain, the Kingdom’s state-owned AI company, AMD will help build a global AI hyperscaler with plans to deploy 500 MW of compute power over the next five years.

TensorWave, a new AI neocloud, has raised $100M to build a liquid-cooled AMD GPU cluster.

Attacking NVIDIA’s Moat

However, AMD’s biggest challenge remains scalability.

NVIDIA’s dominance doesn’t just come from offering AI accelerators or CUDA, it comes from being the first to offer rack-scale systems with the ability to interconnect dozens of GPUs. Their NVLink and NVSwitch technologies provide unmatched interconnect performance, making NVIDIA the gold standard for large-scale compute efficiency.

That’s what AMD is now aiming to change.

The MI355X system will be AMD’s most powerful and scalable platform yet, but the real ambition lies in the upcoming MI400 series, which aims to connect more GPUs than NVIDIA’s equivalent systems, creating the most efficient rack-scale solution for inference and liberating thousands of companies from the extractive NVIDIA monopoly.

To compete, AMD is collaborating with industry titans on a new open interconnect standard called UALink. NVIDIA’s NVLink gave them a massive edge, but that edge may soon disappear.

The UALink Consortium, which includes AMD, Google, Microsoft, Meta, Apple, Broadcom, Cisco, Astera Labs, Amazon, Intel, and others, has already released the UALink 1.0 spec, which early tests show matches NVLink’s performance.

UALink 1.0 supports:

Up to 1,024 accelerators in a single fabric

Up to 800 Gb/sec per port, configurable in x1, x2, or x4 links

Sub-microsecond round-trip latency at rack scale

Massive power and cost savings vs Ethernet and PCIe
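The per-port figure above depends on link width. Here is a minimal sketch of how that scales, assuming 200 Gb/s per lane (the rate that makes an x4 port reach the 800 Gb/s quoted above). Whether that figure is counted per direction or bidirectionally is not specified in the list, so the sketch does not take a position on it.

```python
# Sketch of how UALink 1.0 port bandwidth scales with link width.
# ASSUMPTION: each lane carries 200 Gb/s, so an x4 port reaches the
# 800 Gb/s figure quoted in the text. Direction accounting is not modeled.

LANE_GBPS = 200  # assumed data rate per lane, in gigabits per second


def port_bandwidth_gbps(lanes: int) -> int:
    """Port bandwidth in Gb/s for an x1, x2, or x4 link."""
    if lanes not in (1, 2, 4):
        raise ValueError("UALink 1.0 ports are configured as x1, x2, or x4")
    return lanes * LANE_GBPS


def gbps_to_gbytes(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


for lanes in (1, 2, 4):
    bw = port_bandwidth_gbps(lanes)
    print(f"x{lanes}: {bw} Gb/s = {gbps_to_gbytes(bw):.0f} GB/s")
```

Narrower ports trade bandwidth for more ports per chip, which is one way a fabric can reach more accelerators.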

If UALink delivers on these promises, it could end NVIDIA’s interconnect monopoly and force them to adopt open standards. That would mark a fundamental shift in the AI data center landscape, with AMD leading the charge.

The world’s biggest tech companies want this. Nobody wants to depend on a single vendor, and that’s where AMD becomes a crucial alternative.

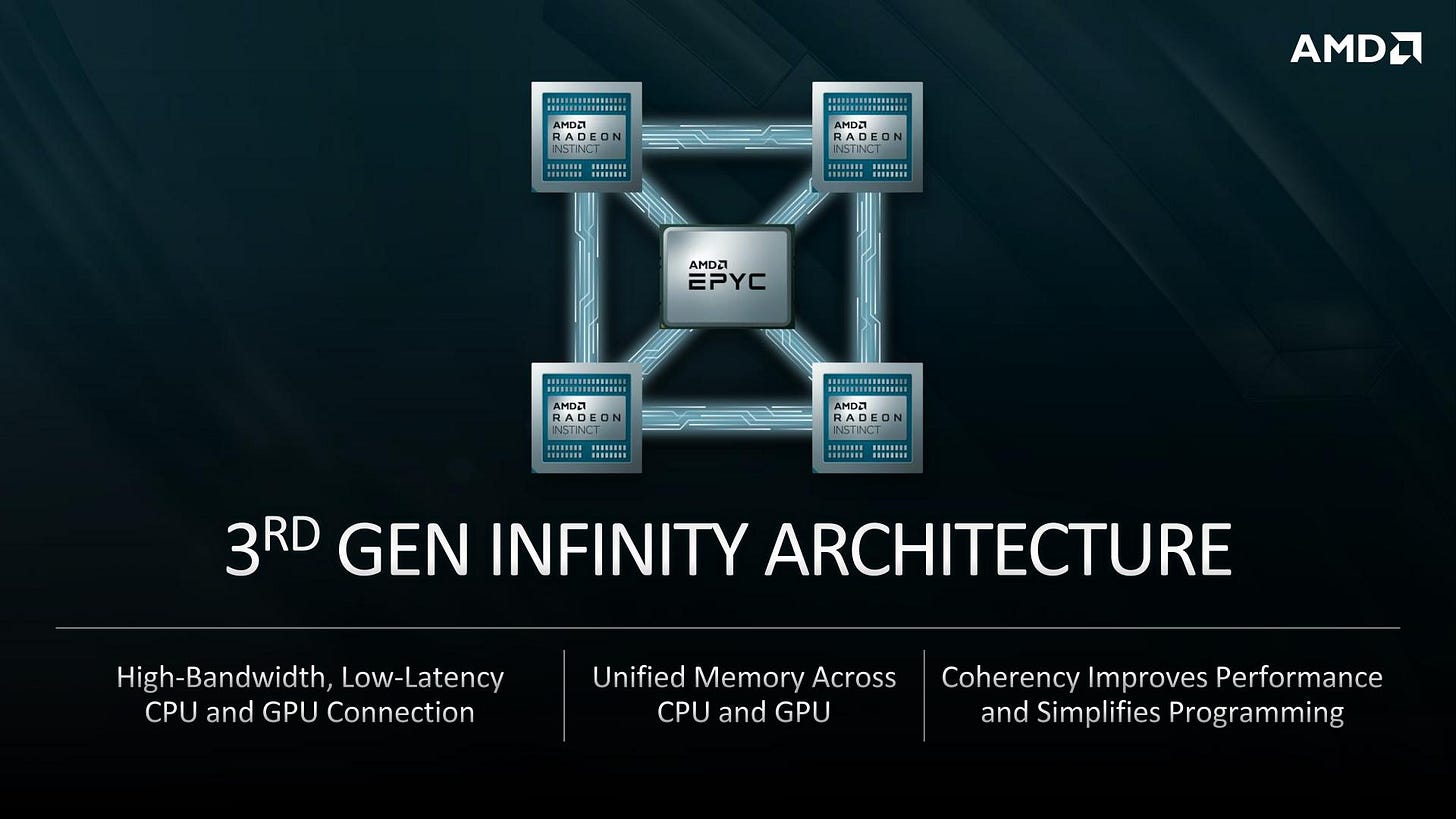

UALink is built on AMD’s Infinity Fabric, combined with the networking IP and expertise of Broadcom and Astera Labs, creating a best-in-class interconnect solution.

One Portfolio to Rule Them All

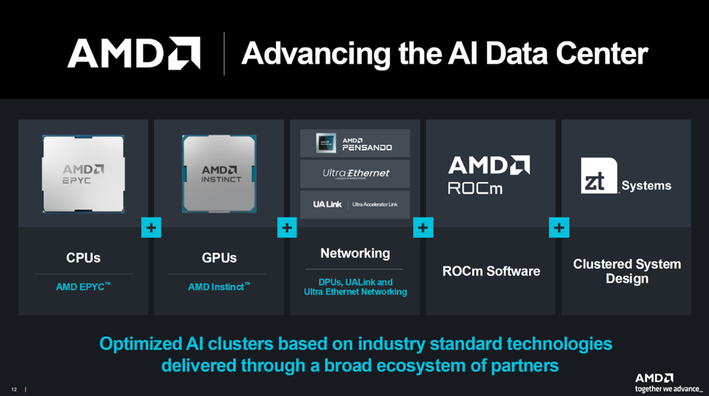

To offer a complete rack-scale AI compute system, AMD acquired Pensando, a company that develops DPUs (Data Processing Units) and software-defined services that offload networking, storage, and security tasks from the CPU. This improves:

Application performance

Infrastructure efficiency

System security

Cost structure

Pensando’s platform acts as a programmable co-processor for cloud infrastructure, handling:

Firewalls

Load balancing

Encryption

Network telemetry

Storage services

This allows CPUs and GPUs to focus purely on AI inference and customer workloads.

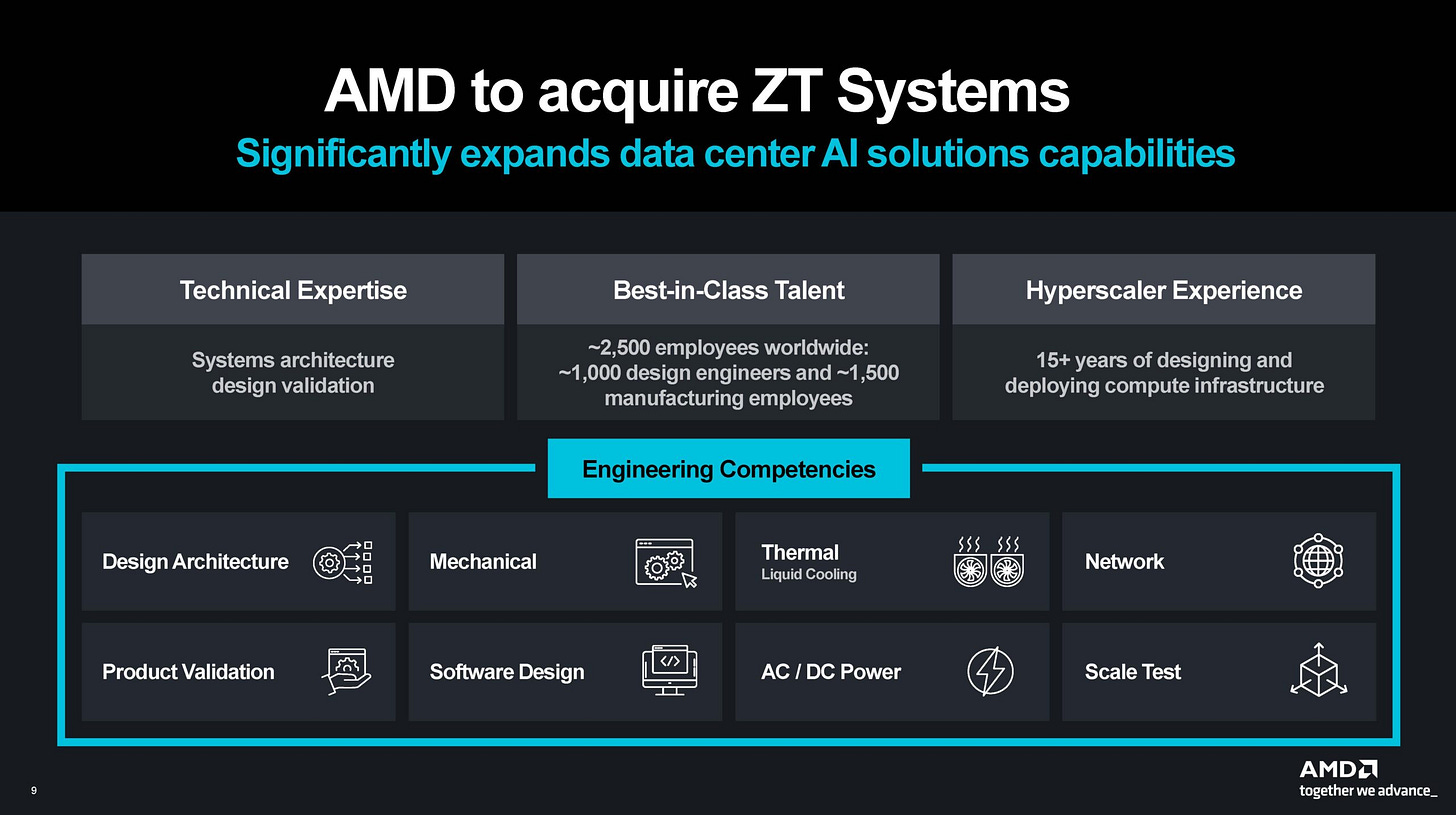

To complete the stack, AMD also acquired ZT Systems, a server and rack designer and manufacturer. This gives AMD the engineering talent, IP, and design capabilities needed to build complete systems that integrate:

EPYC processors

Instinct AI accelerators

Pensando DPUs

UALink interconnect hardware

For years, AMD lacked full rack-scale systems: it had no equivalent to NVIDIA’s NVLink, NVSwitch, or UFM. That was a critical shortcoming.

Now, that’s changing:

ZT Systems will launch full-rack UALink-based servers in 2025

AMD is integrating Pensando DPUs and NICs into its server designs

The MI355X and MI400 series will scale across UALink fabrics

These upcoming systems could match or even surpass NVIDIA’s DGX line, especially when paired with AMD’s unmatched chiplet architecture expertise.

Beyond That: MI400 Series and Rack-Scale Supremacy

The MI400 series will take things to the next level. It doesn’t just aim for large-scale deployment, it aims to become the largest-scale AI system in the world.

In H2 2026, AMD will release the MI450X, its first-ever large rack-scale architecture.

It uses their proprietary Infinity Fabric protocol over Ethernet for the scale-up domain to connect 128 GPUs.

By comparison, NVIDIA's H2 2026 offering will only connect 72 GPU packages in the same domain.

This means AMD could have a roughly 78% advantage in scale-up world size (128 vs. 72).
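The scale-up comparison reduces to a single ratio. This sketch uses only the two domain sizes given above: 128 GPUs for AMD’s MI450X and 72 GPU packages for NVIDIA’s H2 2026 offering.

```python
# Scale-up domain comparison using the figures in the text:
# 128 GPUs per AMD MI450X domain vs. 72 GPU packages per NVIDIA domain (H2 2026).
amd_domain = 128
nvidia_domain = 72

ratio = amd_domain / nvidia_domain
advantage = ratio - 1
print(f"AMD domain is {ratio:.2f}x NVIDIA's, i.e. {advantage:.0%} larger")
```

The comparison counts GPU packages on both sides. It says nothing about per-GPU performance or interconnect bandwidth, which also determine how useful a larger domain is.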

Beating NVIDIA in their own domain would be unprecedented, and could turn AMD from the underdog to the leader.

Pushing the Frontier: Photonics and Next-Gen AI

Finally, AMD is also laying the foundation for next-gen AI accelerators. Just two days ago, AMD acquired Enosemi, a silicon photonics startup.

This elite team will immediately scale AMD’s ability to develop photonics and co-packaged optics solutions across next-gen AI systems.

The Enosemi team, based in Silicon Valley, consists of PhD-level talent with a proven track record of building and shipping photonic integrated circuits at volume, a rare and powerful capability.

Their depth of experience, technical rigor, and execution track record make them an ideal fit for AMD as it pushes deeper into high-performance interconnect innovation.

A Full-Stack AI Giant in the Making

After acquiring Xilinx, Pensando, ZT Systems, and Enosemi, AMD is now uniquely positioned to offer a full-stack AI system, controlling every piece of the puzzle to ensure the highest quality and performance.

With this portfolio, AMD isn’t just looking to take part, it’s looking to take over the AI accelerator space.

APUs

AMD is the inventor and global leader in Accelerated Processing Unit (APU) technology. APUs are systems-on-a-chip (SoCs) that combine a CPU and GPU in a single processor. More importantly, AMD’s latest APUs also include a powerful Neural Processing Unit (NPU) designed specifically for AI tasks. The NPU offloads AI workloads from the CPU, allowing systems to run faster and more efficiently.

Their most advanced APU is Strix Halo, part of the Ryzen AI Max series. This processor is redefining the PC landscape and proving to be an ideal solution for running AI inference locally.

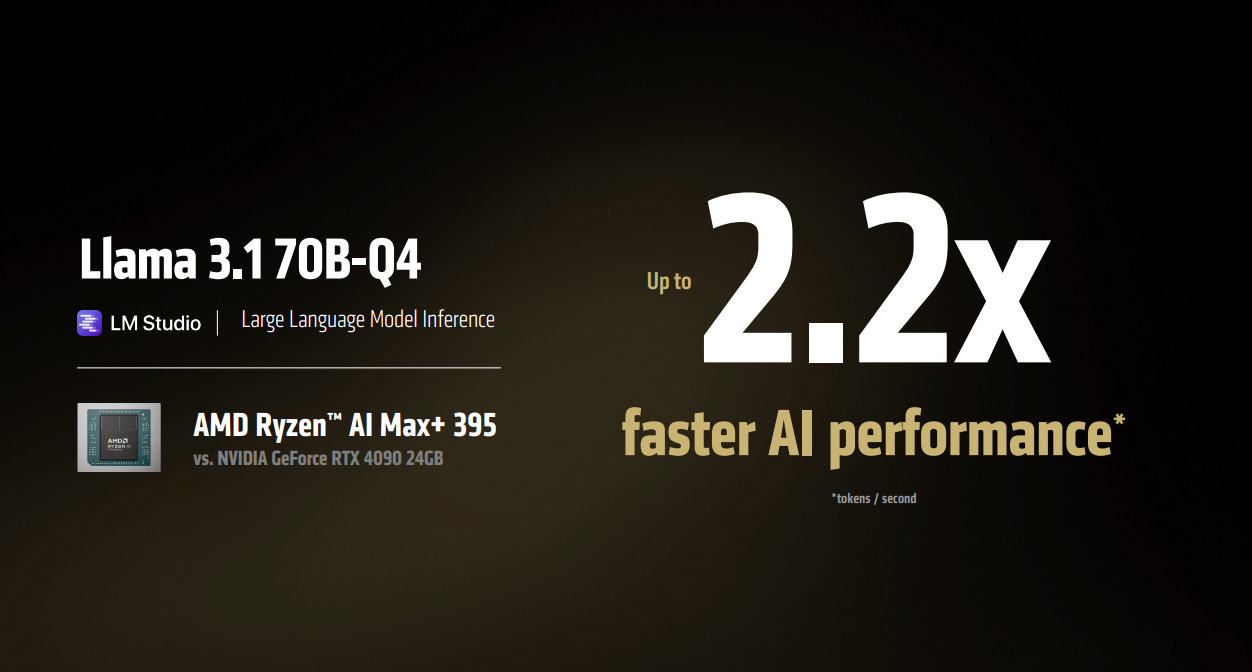

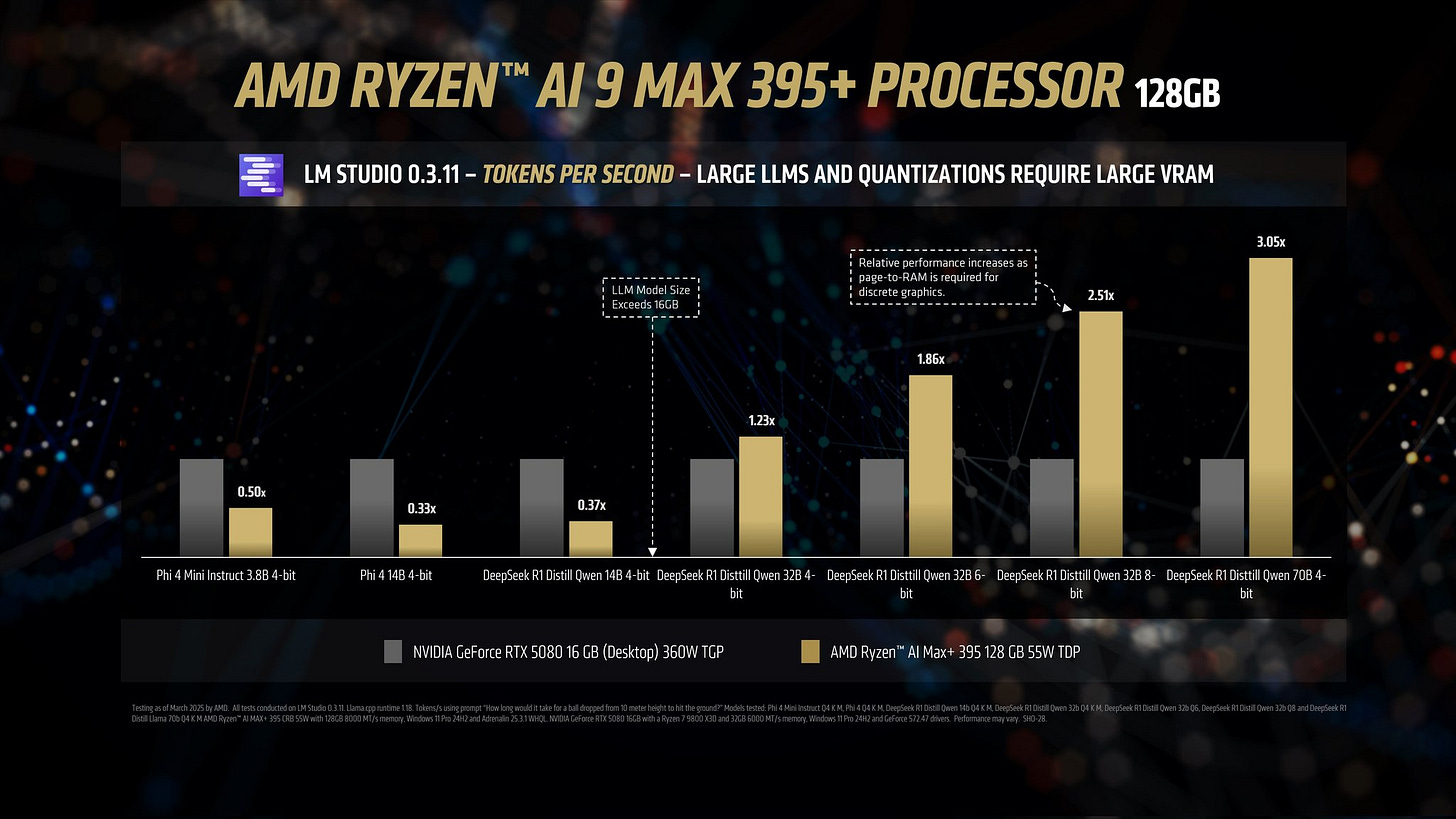

These new APUs deliver impressive performance across general-purpose computing, gaming, and AI inference. Strix Halo, the flagship of the Ryzen AI lineup, has run over 3× faster than the RTX 5080 in DeepSeek R1 inference benchmarks.

It directly competes with NVIDIA’s Project DIGITS (which hasn’t launched yet). Through partners like Framework, AMD is offering desktop supercomputers with the best local AI inference performance proven so far. For just $2,000, these systems offer tremendous specs at a significantly lower price than DIGITS, which is expected to launch at over $3,000.

FPGAs

FPGAs (Field-Programmable Gate Arrays) are a type of chip that can be reprogrammed at the hardware level after manufacturing. Their versatility and high degree of customization allow them to be optimized for specific workloads, making them ideal for a wide range of industries.

They’re used in aerospace, AI inference, drones, 3D printing, defense, robotics, telecommunications, and financial services. When AMD acquired Xilinx, the world’s largest FPGA company, it marked the biggest acquisition in semiconductor history.

This deal didn’t just expand AMD’s total addressable market, it gave them exposure to promising verticals like space exploration, robotics, drones, advanced machinery, and satellite communications. For example, one major client of AMD’s Versal FPGAs is Starlink, which began using them last year in its latest generation of satellites.

AMD is also integrating Xilinx technology into its NPUs. The new Versal and Ryzen AI processors feature NPUs built on the XDNA architecture, which significantly enhance compute capacity and power efficiency.

Looking forward, FPGAs will further strengthen AMD’s competitiveness in the data center space. The upcoming MI400 accelerator series will reportedly incorporate advanced Xilinx technology, providing new inference performance advantages.

Beyond that, the Xilinx acquisition gave AMD not only cutting-edge IP but also a world-class engineering team, one that’s already contributing to improvements across multiple AMD product lines.

Chiplets: The Ultimate Advantage

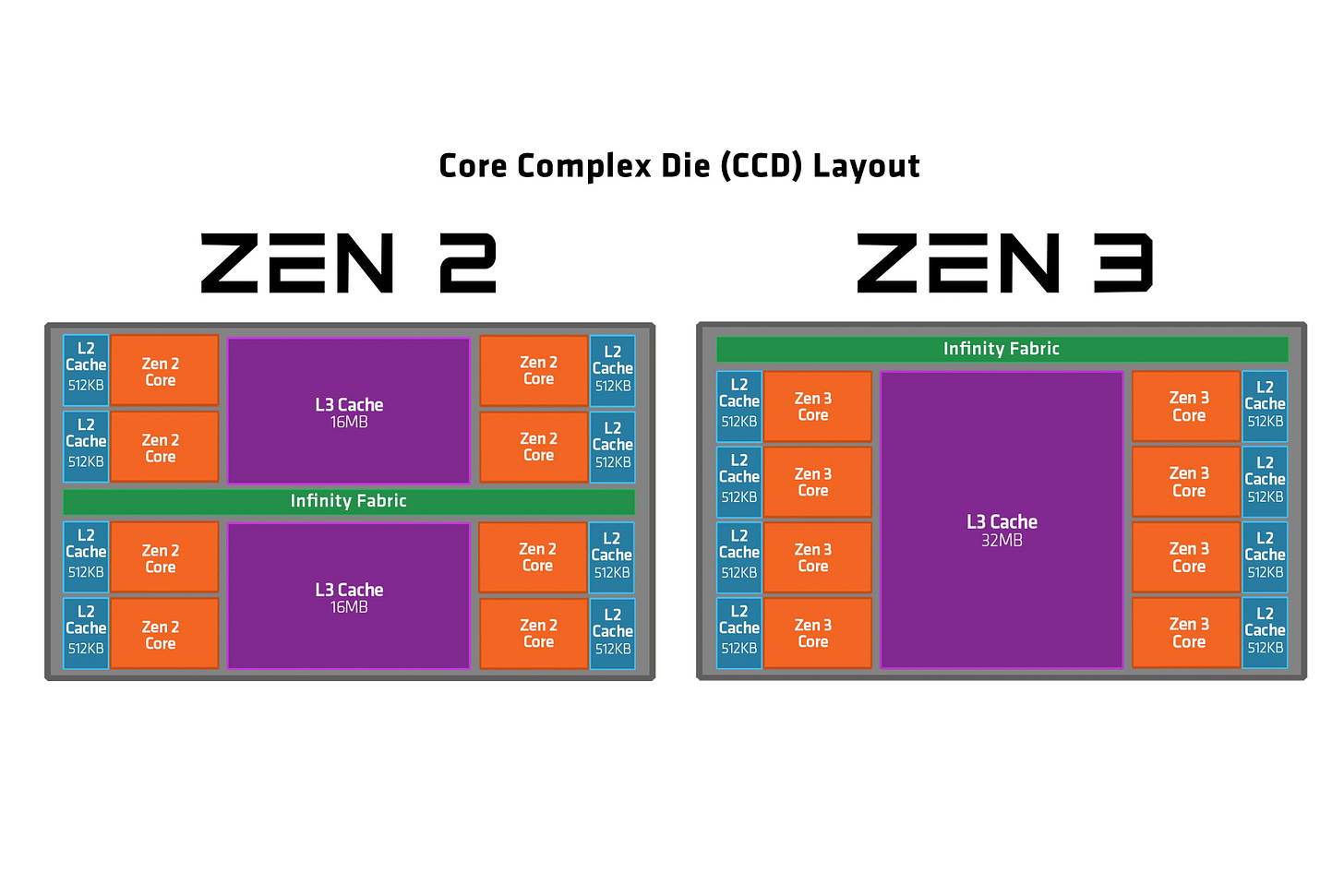

For many years, computer chips were built as a single slab of silicon, known as a monolithic die. But as chips became more powerful and complex, this method became increasingly difficult and expensive. That’s where chiplets come in. Instead of building one large chip, companies now design smaller modules, called chiplets, which are connected within a single package. This makes manufacturing more efficient and flexible.

Several companies now use chiplets, but AMD was the first to bring them to the mainstream and still leads the field.

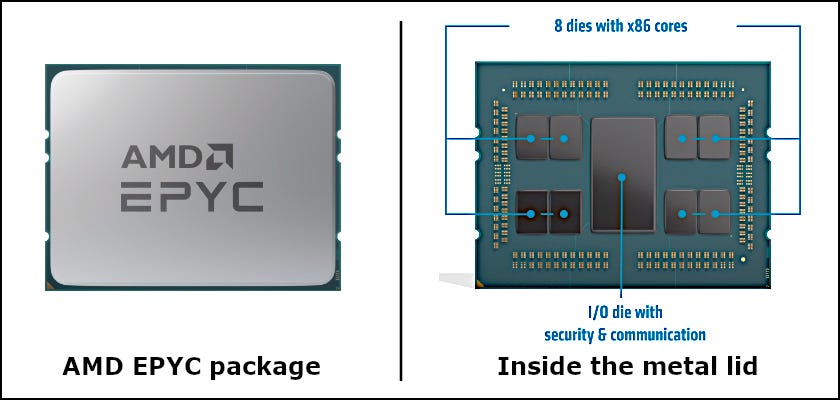

In 2019, AMD launched its Zen 2 architecture, introducing chiplet-based designs in the Ryzen 3000 series for desktops and EPYC Rome for data centers. These weren’t experimental models. They were high-performance, mass-produced chips sold to millions of customers. AMD split the processor into compute chiplets, known as Core Complex Dies (CCDs), and assigned memory and input/output functions to a separate I/O Die (IOD). No other company had deployed this approach across an entire product line.

The benefits were substantial. Smaller chiplets are easier to manufacture and less prone to defects, leading to better yields and lower production costs. This design also enabled AMD to build larger and more powerful processors. The 96-core EPYC 9654, for instance, uses 12 compute chiplets plus a central I/O die in a single package, which would not be possible with a monolithic die.
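Why smaller dies yield better can be illustrated with the simple Poisson yield model, Y = exp(−D·A), where D is defect density and A is die area. The defect density and die sizes below are hypothetical, not AMD figures. The sketch also assumes each chiplet is tested before packaging, so a defect scraps only one small chiplet rather than the whole processor.

```python
# Illustrative yield comparison: one large monolithic die vs. several small
# chiplets, using the Poisson yield model Y = exp(-D * A).
# The defect density and die areas are HYPOTHETICAL, not AMD figures.
import math

DEFECT_DENSITY_PER_CM2 = 0.1  # hypothetical defects per cm^2


def poisson_yield(area_mm2: float, d0_per_cm2: float = DEFECT_DENSITY_PER_CM2) -> float:
    """Fraction of dies with zero defects under the Poisson model."""
    area_cm2 = area_mm2 / 100.0  # 100 mm^2 = 1 cm^2
    return math.exp(-d0_per_cm2 * area_cm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int) -> float:
    """Expected wafer area (mm^2) consumed per working product.

    Assumes dies are tested individually (known-good-die testing), so a
    defect scraps only the affected die, not the whole product.
    """
    return dies_per_product * die_area_mm2 / poisson_yield(die_area_mm2)


# Same total logic area (800 mm^2), built two ways (hypothetical sizes).
mono = silicon_per_good_product(800.0, 1)
chiplet = silicon_per_good_product(100.0, 8)

print(f"Monolithic: yield {poisson_yield(800.0):.1%}, {mono:.0f} mm^2 per good part")
print(f"Chiplets:   yield {poisson_yield(100.0):.1%}, {chiplet:.0f} mm^2 per good part")
```

With these made-up inputs, the chiplet design consumes roughly half the wafer area per working product. The model deliberately ignores packaging losses, die-to-die interconnect overhead, and the separate I/O die, all of which eat into the advantage in practice.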

To make this architecture work, AMD developed Infinity Fabric, a custom interconnect that allows chiplets to communicate with low latency and high efficiency. This system powers AMD’s entire product range, including desktops, laptops, servers, and GPUs.

As a result, EPYC chips quickly gained traction in data centers. They offered more compute power, better energy efficiency, and lower costs, prompting companies like AWS, Microsoft Azure, and Oracle to adopt them. This helped AMD secure a significant share of the server market.

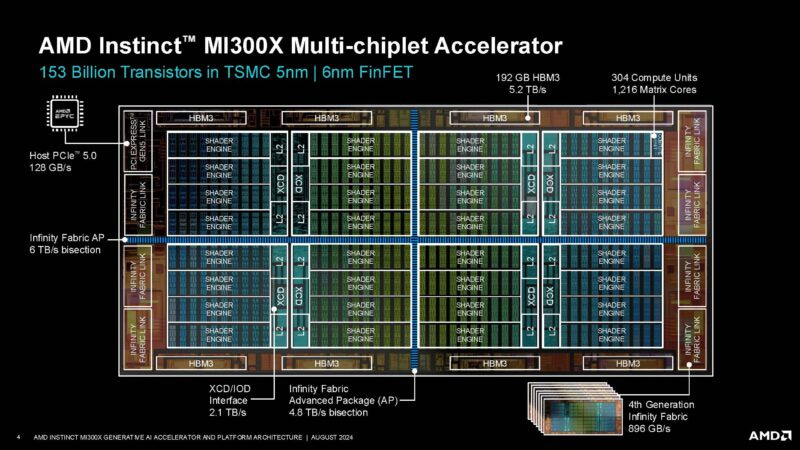

AMD hasn’t stopped there. Its latest AI accelerators push chiplet design further: the MI200, MI300X, and MI325X combine multiple GPU chiplets with high-bandwidth memory in a single package, and the MI300A adds CPU chiplets to the mix, integrating CPU, GPU, and high-bandwidth memory into one unified system. This allows them to efficiently handle demanding tasks like artificial intelligence and scientific computing. So far, no other company has released anything this advanced using chiplets.

Although Intel and NVIDIA now use chiplets as well, Intel with Sapphire Rapids and NVIDIA with Blackwell, they entered the space much later.

In summary, AMD didn’t just adopt chiplets, it pioneered them at scale. While others are only beginning to follow the same path, AMD’s deep experience and early lead give it a strong advantage in an era where chiplet-based design is critical for building next-generation AI chips.

The Software Shift

NVIDIA’s dominance in AI isn’t just about chips. It built a powerful software moat through CUDA, cuDNN, and deep integration across the AI stack. This tight control over both hardware and software created a closed ecosystem that made switching difficult for developers.

AMD is now closing the gap. Its open-source ROCm platform is gaining traction, offering developers more flexibility and transparency than CUDA ever could. And through key acquisitions and strategic initiatives, AMD is building a competitive software ecosystem of its own.

The Nod.ai Effect and the Rise of Open Source

A major shift came with AMD’s acquisition of Nod.ai, an open-source AI software startup. Its CEO, Anush Elangovan, now leads AMD’s AI software division. Under his leadership, community engagement has surged, and ROCm has become more accessible and robust.

One standout example is AMD’s $100,000 ROCm challenge, where developers compete to achieve the best performance on the MI300X. With over 18,000 submissions, AMD is effectively crowdsourcing high-performance optimizations for the cost of a single mid-level salary. This is the strength of open source, something NVIDIA’s closed system can’t replicate.

Inference: AMD’s Immediate Battlefield

AMD’s AI software team is now heavily focused on inference optimization. The results are already showing. DeepSeek benchmarks run with AITER reveal impressive gains, and AMD is working closely with Microsoft’s AI team to enhance performance across real-world workloads.

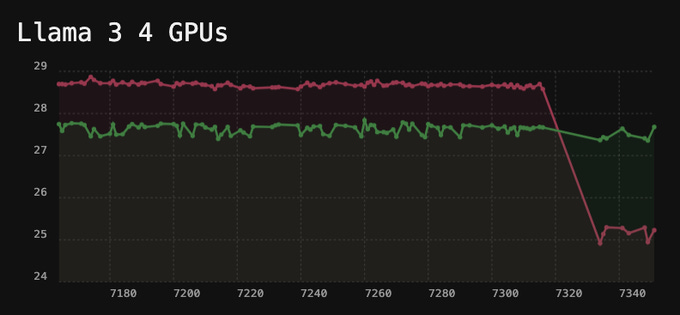

These aren’t just internal claims. Tinycorp, a company building AI workstations, has started offering AMD-based systems. Their engineers achieved faster Llama inference speeds on AMD chips than on NVIDIA’s, and, as the cherry on top, the CEO was so impressed he bought $250,000 in AMD stock.

Internally, AMD plans to double the size of its AI software team every six months, according to Chris Sosa, Director of Engineering at AMD AI Software. And at Computex, ROCm support was extended to Strix Halo and RDNA4 GPUs, a crucial step in aligning software across AMD’s expanding product line.
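Doubling every six months compounds quickly. This sketch shows only the growth multiple, since the article gives no starting headcount. It assumes the doubling pace holds for the whole period, which the source states as a plan, not a result.

```python
# Team-size multiple implied by doubling every six months.
# The starting headcount is not given in the article, so only the growth
# multiple is computed. Assumes the stated doubling pace holds throughout.
DOUBLING_PERIOD_MONTHS = 6


def growth_multiple(months: float, doubling_period: float = DOUBLING_PERIOD_MONTHS) -> float:
    """Size multiple after `months` at a constant doubling pace."""
    return 2 ** (months / doubling_period)


for months in (6, 12, 24):
    print(f"After {months} months: {growth_multiple(months):.0f}x the starting team")
```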

Beyond ROCm: Silo AI and Mipsology

AMD isn’t stopping at inference or open source. It acquired Silo AI, Europe’s largest AI lab, which specializes in domain-specific models for sectors like manufacturing, healthcare, finance, and public infrastructure. Their focus on sovereign AI, local language models, and on-prem deployment makes them especially relevant in the European market.

Silo AI is now more than a partner, it’s a pillar of AMD’s full-stack strategy, helping it move from just selling silicon to delivering complete AI systems optimized for AMD hardware.

To further extend its reach, AMD also acquired Mipsology, a French company specializing in FPGA-based AI inference. Their platform, Zebra, lets developers run TensorFlow or PyTorch models directly on FPGAs, without rewriting code or retraining models.

This unlocks new use cases in industries like defense, aerospace, and automotive, where power, latency, and long product life cycles matter more than raw compute. Now that AMD owns Xilinx, the FPGA leader, Mipsology fills in the software layer to bring AI to more power-sensitive environments.

Financials

This isn’t just a story about promises and hopes. AMD doesn’t just have plans, they have results.

This is still a high-growth company, even though it's currently missing key products that could expand its TAM by hundreds of billions, and soon, trillions.

Here’s a quick snapshot of last quarter’s year-over-year results:

Revenue: Up 36%

Gross Profit: Up 40%

Operating Income: Up 57%

Net Income: Up 55%
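Profit growing faster than revenue means margins expanded. This sketch uses only the growth rates listed above and computes ratios, with no absolute dollar amounts assumed. It shows how much gross and operating margins rose relative to their year-ago levels.

```python
# Margin expansion implied by last quarter's year-over-year growth rates
# (figures from the list in the text). Only ratios are computed.
revenue_growth = 0.36
gross_profit_growth = 0.40
operating_income_growth = 0.57

# new_margin / old_margin = (1 + profit_growth) / (1 + revenue_growth)
gross_margin_change = (1 + gross_profit_growth) / (1 + revenue_growth) - 1
operating_margin_change = (1 + operating_income_growth) / (1 + revenue_growth) - 1

print(f"Gross margin expanded by {gross_margin_change:.1%} (relative)")
print(f"Operating margin expanded by {operating_margin_change:.1%} (relative)")
```

The gap between the two is operating leverage: operating expenses grew more slowly than gross profit, so each extra dollar of revenue carried more profit to the operating line.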

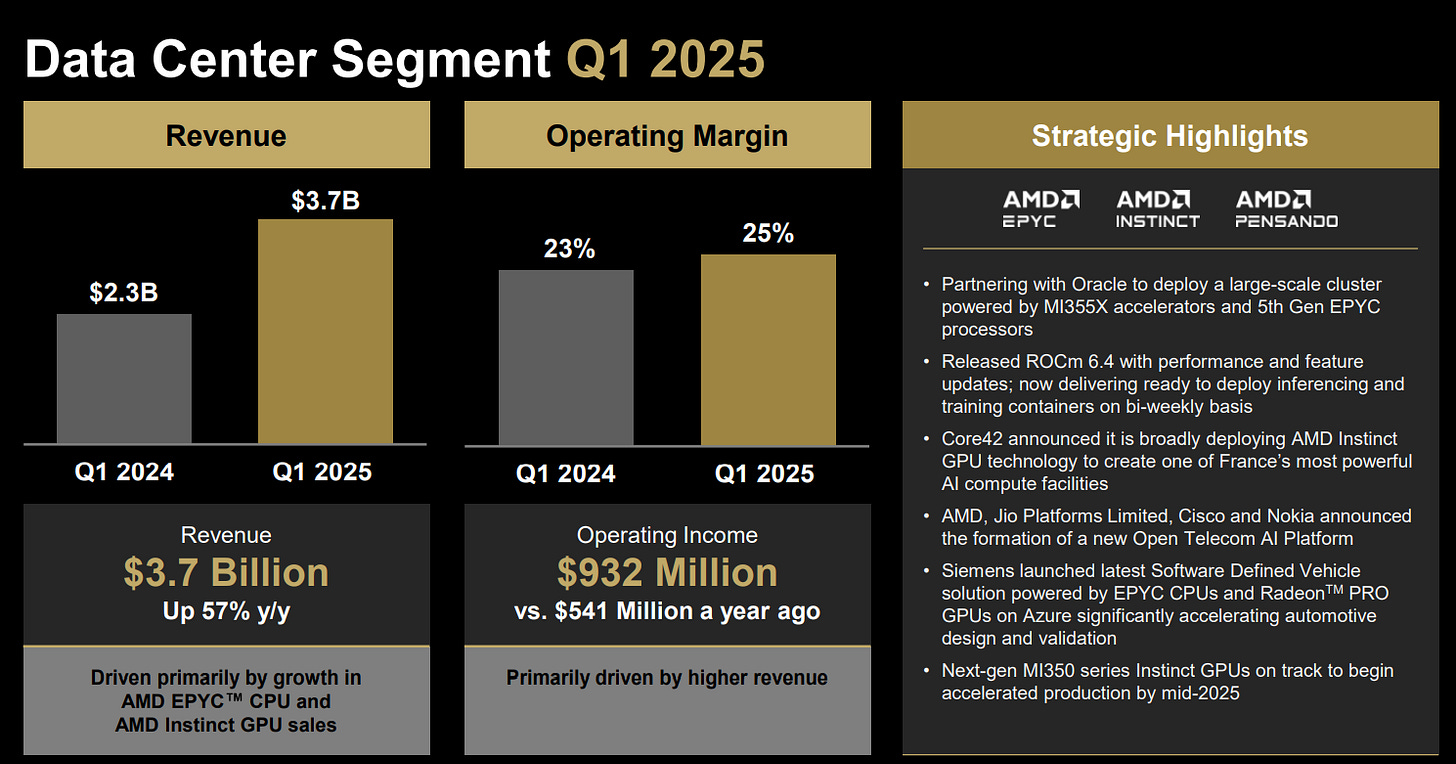

The Data Center segment led the way, growing 57% YoY, with operating margin improving from 23% to 25%.
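The two Data Center figures together imply how fast the segment’s operating income grew. Because operating income equals revenue times operating margin, the growth factor is (1 + revenue growth) × new margin / old margin. The margins quoted are rounded, so treat the result as approximate.

```python
# Implied growth in Data Center operating income, from the figures in the text:
# revenue +57% YoY, operating margin from 23% to 25% (rounded values).
# operating_income = revenue * operating_margin
revenue_growth = 0.57
old_margin, new_margin = 0.23, 0.25

op_income_growth = (1 + revenue_growth) * new_margin / old_margin - 1
print(f"Implied Data Center operating income growth: {op_income_growth:.0%}")
```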

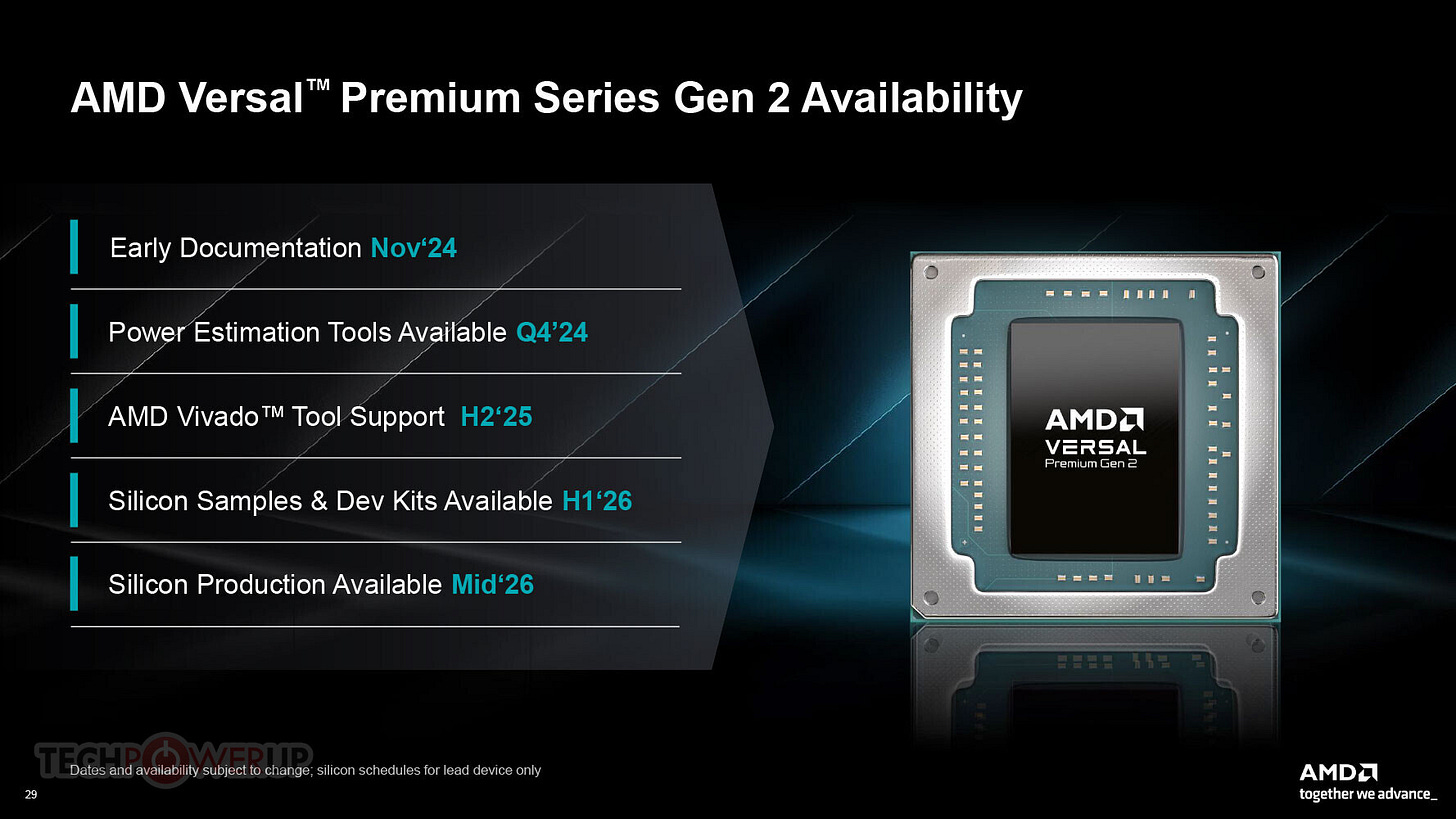

The only segment that held AMD back was Embedded (FPGAs). AMD is currently refreshing its FPGA lineup, and the new products launching this year are expected to turn this drag into a catalyst.

On an annualized basis, AMD is trading at:

6× Sales

25× Operating Income

28× earnings (P/E)
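Working the multiples backwards shows what annual figures they imply for the ~$180 billion market cap used earlier in the article. Reading "annualized" as the latest quarter multiplied by four is an assumption, as is applying the same market cap to all three multiples.

```python
# Working the multiples backwards for a ~$180B market cap (article figure).
# ASSUMPTION: "annualized" means the latest quarter multiplied by four.
MARKET_CAP_B = 180.0

multiples = {
    "sales": 6.0,
    "operating income": 25.0,
    "earnings": 28.0,
}

for metric, multiple in multiples.items():
    annual = MARKET_CAP_B / multiple
    quarterly = annual / 4
    print(f"{metric:>16}: ~${annual:.1f}B per year (~${quarterly:.2f}B per quarter)")

# Earnings yield is the inverse of the price-to-earnings multiple.
earnings_yield = 1 / multiples["earnings"]
print(f"Earnings yield: {earnings_yield:.1%}")
```

A 6× sales multiple on $180 billion implies about $30 billion in annualized revenue, or roughly $7.5 billion per quarter.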

These numbers are compelling, especially considering the long-term growth of the AI cycle. But even these multiples may overstate the valuation, given that AMD expects significantly stronger results in the second half of the year.

That expectation is based on two key reasons:

The release of their new MI355X AI accelerator, featuring a new architecture and vastly improved inference performance, is scheduled for mid-year. In addition to the Oracle and Saudi Arabia deals, client interest is strong following early testing.

Export restrictions will impact Q2 results. However, AMD’s Q2 guidance still calls for revenue roughly in line with Q1. In the second half of the year, this headwind will normalize, allowing growth to resume. AMD is also preparing a new AI chip customized for China, which will reduce the impact of export controls.

Considering all this, AMD is trading at an attractive valuation, one that clearly doesn’t reflect the massive opportunity it holds in the emerging multi-trillion-dollar inference market.

Competitor Comparison


Subscribe to Daniel Romero to keep reading this post and get 7 days of free access to the full post archives.