Astera Labs: Deep Dive

Triple-digit growth, 40%+ FCF margin, and a customer list that includes NVIDIA, AMD, Google, and Amazon

Introduction

Data centers aren’t just about filling racks with powerful CPUs and GPUs.

Without the right connections, those chips are isolated islands of compute. The real bottleneck in scaling AI infrastructure isn’t processing power; it’s how quickly data can move across servers, racks, and clusters.

This is where networking becomes critical. Ultra-low latency and high-bandwidth links allow thousands of accelerators to operate as one massive system.

In this piece, I’m focusing on Astera Labs, a company deeply embedded in the AI data center stack. I’ll cover Coherent Corp., another important player, in a separate article later this week.

Astera Labs in AI Infrastructure

Astera Labs isn’t a household name, but it plays a central role in AI data centers. As a fast-growing designer of connectivity silicon, Astera solves bottlenecks between accelerators and memory. Its chips act as traffic controllers and signal amplifiers, making sure data moves efficiently inside the server.

The company is built around short-reach, low-latency connections within the rack. Its Aries retimers extend PCIe and CXL links, while newer products like Scorpio switches and Leo memory controllers are gaining traction across major hyperscalers.

Networking

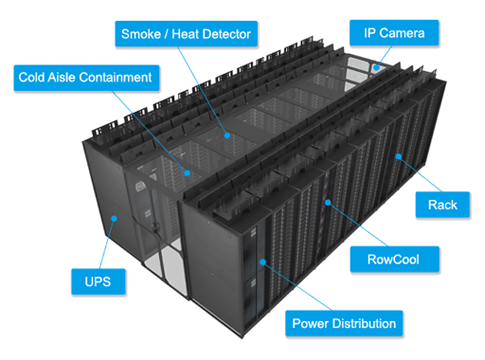

Modern AI data centers are the engine rooms of the digital economy, filled with servers and specialized processors like GPUs. Training a large language model can involve tens of thousands of chips exchanging data simultaneously. This puts enormous pressure on the networking fabric.

Within a rack: servers connect through short copper or optical cables to a top-of-rack switch.

Between racks: optical fibers link clusters and backbone switches across the data hall.

The challenge is that copper connections degrade quickly. Beyond a few meters, they lose efficiency and signal strength, making them unfit for AI’s exploding bandwidth and latency requirements.

This is where Astera comes in.

Astera’s chips sit inside the rack, boosting and cleaning degraded copper signals before they reach the next component. By using retimers and smart cable modules, Astera extends the reach and reliability of copper connections, keeping data flowing fast and clean across dense AI servers.

While optics handle long distances, it’s Astera’s silicon that ensures signal integrity and low latency within the rack, where most of the data movement happens.

Background

Founded in 2017, Astera Labs is a data center connectivity pure-play. Unlike Coherent, which focuses on photonics, Astera specializes in silicon and hardware that extend and manage high-speed links inside servers and across racks.

The company was started by former Texas Instruments engineers who anticipated the rise of heterogeneous computing. In modern data centers, CPUs, GPUs, and accelerators must all work together. That mix creates bottlenecks in the data pipelines, and, as CEO Jitendra Mohan describes it, Astera’s mission is simple: remove bottlenecks wherever they appear.

The Product Portfolio

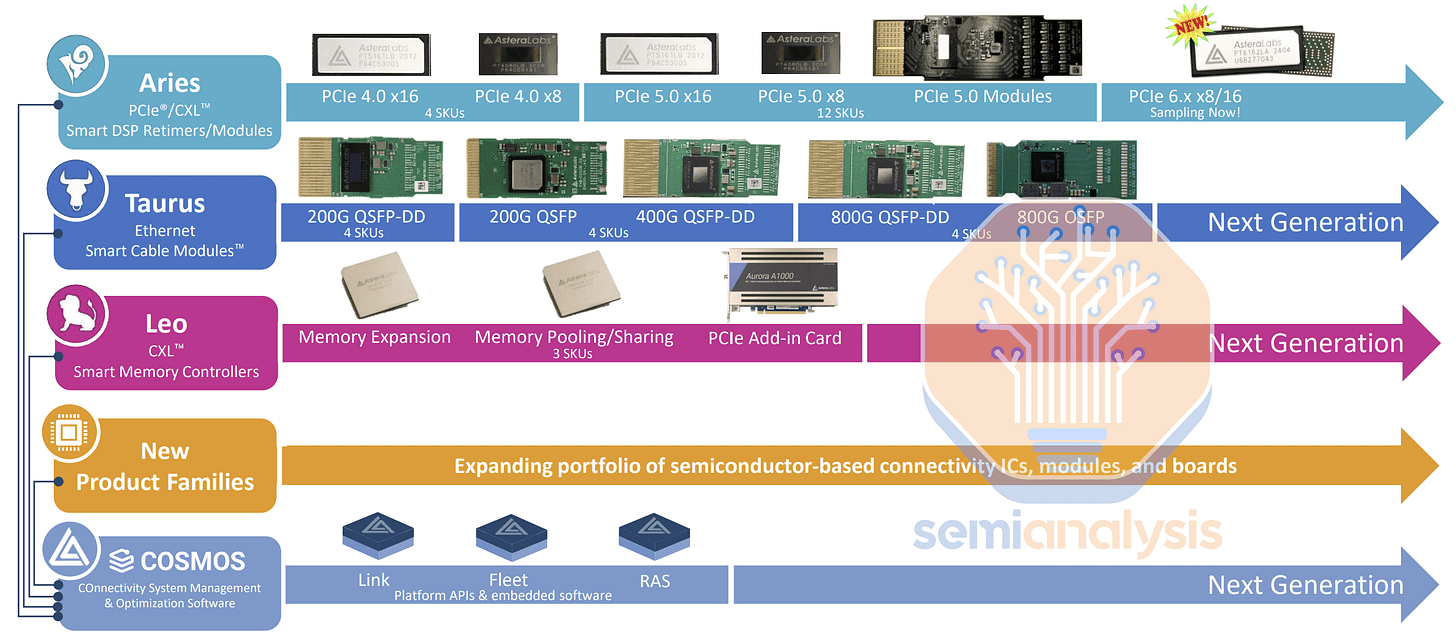

Astera has developed a full stack of silicon and software for rack-scale systems:

Aries: PCIe and CXL retimers that clean and retransmit high-speed signals. Deployed in standalone chips and in smart cables.

Taurus: Ethernet Smart Cable Modules. These boost the performance of copper links between switches and servers.

Leo: CXL memory controllers that expand memory capacity beyond what's physically installed on CPUs and GPUs.

Scorpio: PCIe Gen6 fabric switches that connect GPUs, CPUs, and NICs across racks. They are positioned as open alternatives to NVIDIA’s NVSwitch and Broadcom’s PCIe switches.

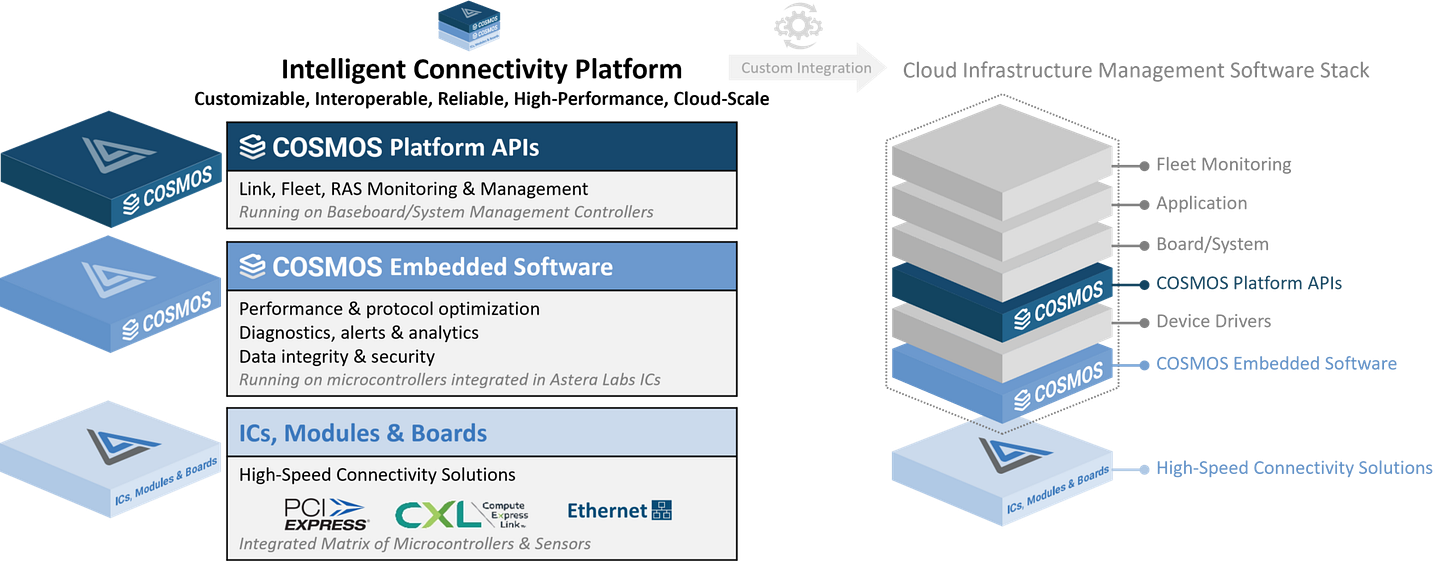

COSMOS: A software suite that monitors, manages, and orchestrates all of Astera’s hardware at the rack level.

Aries: The Booster Behind AI Racks

The core of Astera’s platform is Aries, a family of retimers that recover and retransmit degraded PCIe or CXL signals. These chips extend signal transmission up to seven meters with standard PCIe 5.0 cables.

Why does this matter? In a traditional server, chips sit only inches apart. In a modern AI pod, GPUs are distributed across multiple racks. Aries retimers keep those GPUs connected with minimal latency and low signal loss.

They are already shipping at scale inside Active Electrical Cables (AECs) and as standalone form factors, giving Astera a critical role in building dense and distributed AI clusters.
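A rough way to see why retimers matter is an insertion-loss budget. The sketch below is a deliberately crude model: a retimer fully recovers and retransmits the signal, so the segment between two retimers starts with a fresh budget. The 36 dB budget is the commonly cited PCIe 5.0 end-to-end figure at 16 GHz; every other number is a hypothetical value, picked so the outputs land near the article’s “one meter” and “seven meters” figures, not an Astera or PCI-SIG specification.

```python
"""Crude insertion-loss model of why retimers extend copper reach."""

BUDGET_DB = 36.0          # commonly cited PCIe 5.0 end-to-end loss budget at 16 GHz
HOST_SIDE_LOSS_DB = 15.0  # hypothetical: package + board trace + connector, per host
CABLE_END_LOSS_DB = 2.0   # hypothetical: retimer-to-cable transition, per cable end
CABLE_DB_PER_M = 4.5      # hypothetical: twinax cable loss per meter at 16 GHz


def passive_cable_reach_m() -> float:
    """No retimers: one segment must absorb both host sides plus the cable."""
    remaining_db = BUDGET_DB - 2 * HOST_SIDE_LOSS_DB
    return max(remaining_db, 0.0) / CABLE_DB_PER_M


def retimed_cable_reach_m() -> float:
    """Retimer at each cable end (AEC-style): the cable becomes its own segment.

    The host-side segments (host loss + one cable end) must also fit the budget.
    """
    if HOST_SIDE_LOSS_DB + CABLE_END_LOSS_DB > BUDGET_DB:
        raise ValueError("host-side segment exceeds the loss budget")
    remaining_db = BUDGET_DB - 2 * CABLE_END_LOSS_DB
    return max(remaining_db, 0.0) / CABLE_DB_PER_M


if __name__ == "__main__":
    print(f"passive copper: {passive_cable_reach_m():.2f} m")  # passive copper: 1.33 m
    print(f"retimed copper: {retimed_cable_reach_m():.2f} m")  # retimed copper: 7.11 m
```

Change the assumptions and the absolute reach moves, but the mechanism, resetting the budget at each retimer, stays the same.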

Beyond Aries: The Expansion Stack

Astera has layered new products on top of Aries to serve broader AI interconnect needs:

Taurus improves Ethernet copper links between servers and switches. Key for top-of-rack and intra-rack communication.

Leo enables memory pooling using CXL 2.0 and 3.0. This is essential for LLMs and other workloads that need far more RAM than one chip can support.

Scorpio brings PCIe switching to GPU clusters. Its Gen6-ready versions allow multiple accelerators to share memory and talk peer-to-peer, without vendor lock-in.

COSMOS software ties it all together, providing telemetry and control across the rack.

Astera’s platform is multi-protocol: PCIe, CXL, Ethernet, NVLink, UALink.

This gives it a unique position in enabling composable infrastructure, where compute and memory are modular and dynamically connected.

Architecture Design Wins

Astera’s growth depends on securing design wins inside the world’s largest AI systems.

Once its chips are designed into a hyperscaler’s architecture, whether NVIDIA’s GPUs, AMD’s rack-scale platforms, or Amazon’s custom silicon, they become part of that infrastructure for years.

This strategy gives Astera high visibility into future demand. Its retimers, fabric switches, and memory controllers are already embedded across major AI platforms. By winning these slots, Astera captures recurring revenue as hyperscalers scale out deployments.

The three most important examples today are NVIDIA Rubin/Blackwell, AMD’s Instinct MI400 Helios platform built on UALink, and AWS Trainium/Inferentia.

Each highlights how Astera is positioning itself as the connectivity backbone of next-generation AI data centers.

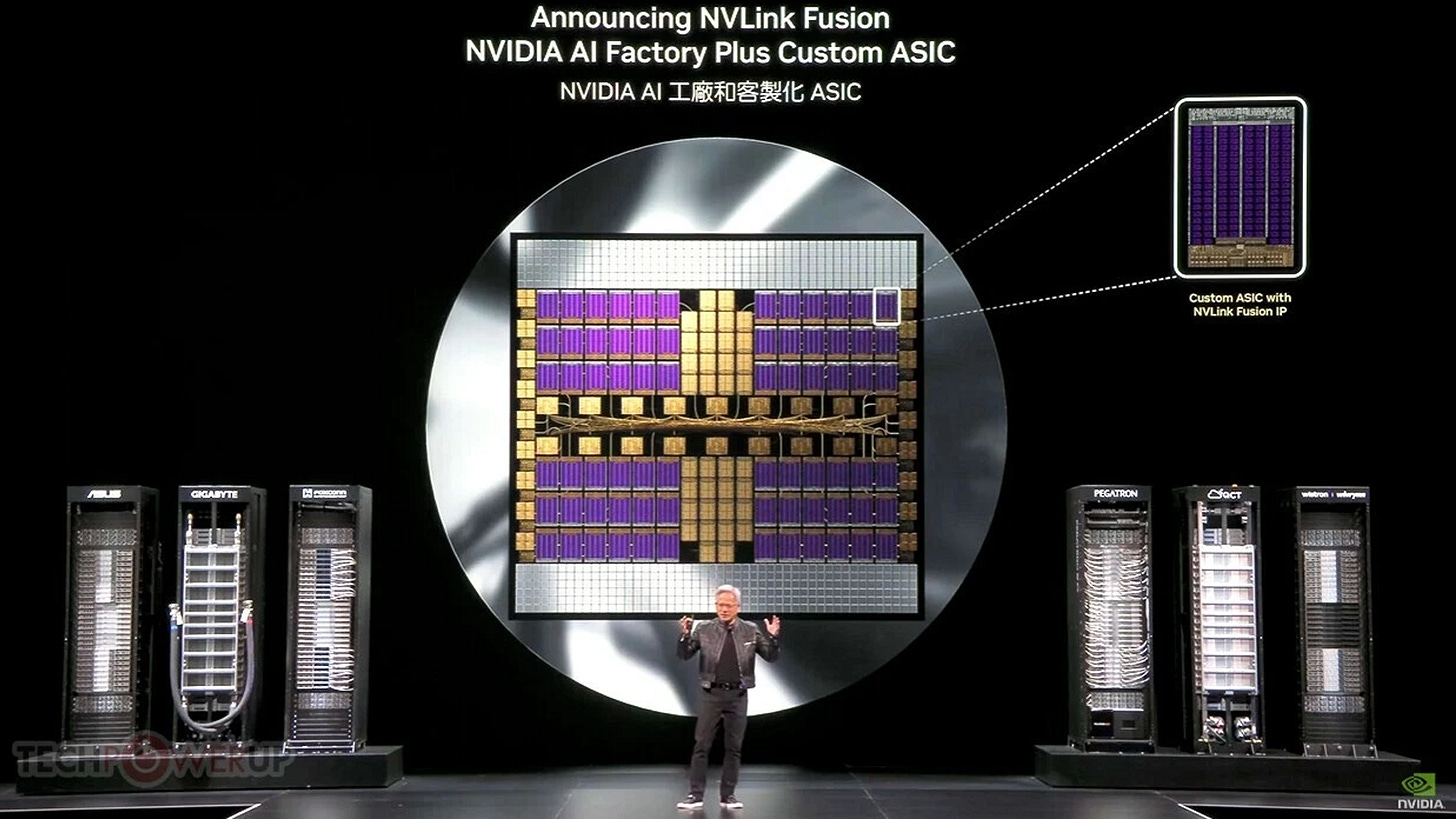

NVIDIA Rubin and NVLink Fusion

Astera has been a key connectivity partner for NVIDIA since the Hopper generation. Its Aries retimers are embedded across NVIDIA’s HGX platforms and have now expanded into Rubin.

With Rubin and NVLink Fusion, NVIDIA is building a new open ecosystem. Astera is directly involved, providing NVLink-capable retimers and switches. At GTC 2025, it demonstrated PCIe 6.0 interoperability with Blackwell GPUs.

Even though Rubin’s GPU-to-GPU links rely on NVLink through NVSwitch, PCIe and Ethernet still connect racks and storage. Astera’s components keep those links fast and low-latency across the entire stack.

NVIDIA has acknowledged Aries’ widespread use and called Astera a long-time ecosystem partner. This opens the door for continued content growth in future NVIDIA systems.

AMD Helios and the UALink Open Standard

Astera is also tightly aligned with AMD’s rack-scale architecture.

The new Helios platform will deploy 72 Instinct MI400 GPUs alongside AMD CPUs and Pensando NICs, all connected over an open fabric built on UALink.

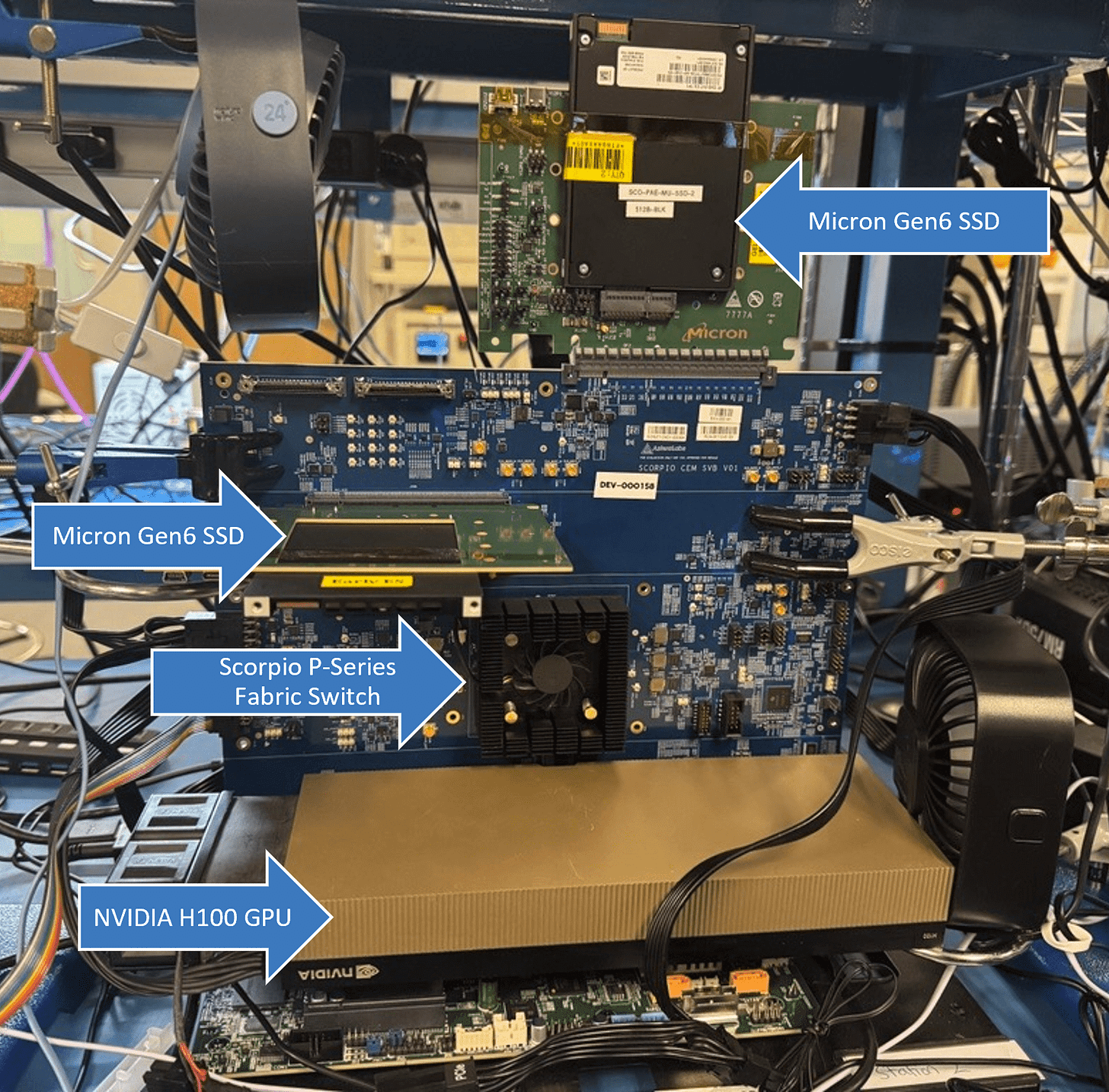

Astera is a founding board member of the UALink Consortium and is contributing silicon to Helios. Its Scorpio-X switches and Aries retimers will interconnect every part of the rack: GPUs, CPUs, NICs, SSDs.

Unlike NVIDIA’s closed NVLink, UALink is open, modular, and optimized for disaggregated GPU clusters. Astera’s support here gives AMD and its cloud customers flexibility and performance.

It also ensures Astera content in every rack built on Helios, one of AMD’s biggest bets in AI.

AWS Trainium and Custom Silicon

Astera is already shipping high volumes of hardware into Amazon’s custom AI racks. Each Trainium 2 chip reportedly uses 128 Astera optical links. These are Active Optical Cables built on PCIe retimers, designed for petabit-scale fabrics.

Astera is also expected to contribute to Trainium 4, possibly providing the I/O chiplets through its partner Alchip.

Amazon is part of the UALink Consortium, and Astera’s presence implies potential wins beyond training, possibly in Inferentia inference racks as well. With hyperscalers doubling down on custom ASICs, Astera is a natural fit to scale alongside them.

Hyperscalers and Alchip Partnership

Astera claims 400+ design wins, many with hyperscalers using custom accelerators or GPUs. It replaced Broadcom on the UALink board, which includes Google, Microsoft, Meta, and Amazon.

To help those customers move faster, Astera partnered with Alchip in 2025 to offer pre-integrated rack solutions: compute ASICs + Astera connectivity. This reduces time-to-market and integration risk.

Astera also works directly with ODMs like Supermicro and Inventec, and testing partners like Keysight. These relationships help Astera secure sockets across the hyperscaler supply chain.

Google TPUs

Although little has been disclosed, Google is known to use Astera’s PCIe Gen5 retimers in its TPU systems.

Rack-Scale Trends

The market is shifting toward rack-scale, disaggregated AI systems.

Instead of putting everything on one board, hyperscalers now build modular racks with shared memory and compute pools.

That requires massive interconnect bandwidth.

Astera has built a portfolio to cover this:

Astera says Leo CXL memory controllers deliver 40% lower latency and twice as many LLM instances per server.

Scorpio switches and Aries retimers power GPU-to-GPU communication across pods.
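The 2x LLM instances figure is easiest to read as a capacity argument. A minimal sketch, assuming inference instances are memory-bound and that CXL-attached memory simply adds to local capacity; all sizes are hypothetical, and the model ignores the bandwidth and latency differences between local and CXL memory.

```python
def max_instances(local_gb: float, cxl_gb: float, gb_per_instance: float) -> int:
    """Count how many memory-bound instances fit in local plus CXL memory."""
    if gb_per_instance <= 0:
        raise ValueError("gb_per_instance must be positive")
    total_gb = local_gb + cxl_gb
    return int(total_gb // gb_per_instance)


# Hypothetical server: 1024 GB of local DRAM, 128 GB needed per instance.
print(max_instances(1024, 0, 128))     # 8
print(max_instances(1024, 1024, 128))  # 16 with 1024 GB of CXL-attached memory
```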

A typical 8-server pod with 8 GPUs each might use 16 Scorpio-X switches, dozens of Aries retimers, and 32 Leo controllers. That can add up to millions of dollars in connectivity silicon per pod.
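The pod example translates into per-GPU attach rates, which is how content-per-system arguments are usually framed. A minimal sketch: the switch, Leo, server, and GPU counts come from the example; the Aries count defaults to zero because the text only says “dozens”, and any prices passed to `content_usd` are your own assumptions (none are given here).

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PodConnectivity:
    """Connectivity parts in one pod (counts from the example in the text)."""
    servers: int = 8
    gpus_per_server: int = 8
    scorpio_x: int = 16
    leo: int = 32
    aries: int = 0  # text says only "dozens"; supply your own estimate

    @property
    def gpus(self) -> int:
        return self.servers * self.gpus_per_server

    def attach_per_gpu(self) -> Dict[str, float]:
        """Parts per GPU for each product family."""
        return {
            "scorpio_x": self.scorpio_x / self.gpus,
            "leo": self.leo / self.gpus,
            "aries": self.aries / self.gpus,
        }

    def content_usd(self, asp_usd: Dict[str, float]) -> float:
        """Connectivity dollars per pod, given hypothetical average selling prices."""
        return (
            self.scorpio_x * asp_usd["scorpio_x"]
            + self.leo * asp_usd["leo"]
            + self.aries * asp_usd["aries"]
        )


pod = PodConnectivity()
print(pod.gpus)              # 64
print(pod.attach_per_gpu())  # {'scorpio_x': 0.25, 'leo': 0.5, 'aries': 0.0}
```

Plugging in price assumptions and a real Aries count is what would test the per-pod dollar figure.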

The company is shipping PCIe 6 and CXL 3.0 products today and co-developing next-gen open fabrics like UALink and Ultra Ethernet.

These are the backbones of future AI factories.

Astera’s CEO expects scale-up connectivity to represent a major growth driver.

On the Q2 2025 earnings call, he said rack-scale AI infrastructure alone could add nearly $5 billion in market opportunity by 2030, on top of Astera’s current TAM.

Competitive Position and Outlook

Astera Labs is not just another connectivity chip company. What sets it apart is how broadly integrated it is across fast-growing AI platforms, and how many protocols it supports.

Where competitors like Broadcom or Marvell tend to focus on one protocol (e.g. Ethernet or proprietary fabrics), Astera supports PCIe 6.0, CXL, Ethernet, NVLink, and UALink.

This gives it multiple points of insertion inside an AI rack. One GPU pod might use:

Aries (PCIe retimer) to connect to the CPU

Scorpio-X (UALink switch) to link GPUs

Leo (CXL controller) to tap into pooled memory

This multi-protocol approach makes Astera a plug-and-play backbone for AI servers.

Astera’s software suite, COSMOS, helps manage all this by monitoring link health, stability, and performance across the rack.

Embedded Across the AI Ecosystem

Astera has already secured positions in the most important AI system architectures: NVIDIA Rubin, AMD Helios (MI400), AWS Trainium, and Google TPUs. That alone forms a moat and speaks to the quality of its offerings.

This industry is not just about technical specs. You have to value a company by who actually chooses to work with it, and here Astera is the preferred pick across the board.

Competitive Landscape

Astera leads in retimers, with its Aries chips first to market for PCIe Gen4/5 and now Gen6. These clean up signals and help transmit them over longer distances. Broadcom and Marvell offer similar Gen6 retimers, but Astera adds fleet-level diagnostics via COSMOS, something competitors lack.

In CXL memory pooling, Astera has an early lead. Few vendors sell a standalone memory controller like Leo, which is critical for training large AI models with pooled RAM.

In Ethernet modules, Astera’s Taurus reaches 400Gbps, which is enough for short copper links. But Marvell’s 1.6Tbps DSPs are ahead for long-distance fiber, where copper falls short.

Both Broadcom and Marvell hold a clear advantage in optics and fiber, and there is no workaround for that. Astera’s CEO has been explicit about it.

In switching, Astera’s Scorpio PCIe Gen6 switch has 64 lanes. Broadcom’s upcoming PEX90144 is expected to offer 144 lanes, which could outscale Scorpio in large builds.
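Lane count matters because it caps fan-out. A minimal sketch, assuming each device takes an x16 link and the switch reserves one x16 uplink (both hypothetical design choices, not vendor reference topologies). The 64-lane figure is from the text; 144 lanes for the Broadcom part is an assumption based on its part number.

```python
def x16_devices(total_lanes: int, uplink_lanes: int = 16, link_width: int = 16) -> int:
    """How many devices of `link_width` lanes fit after reserving uplink lanes.

    Assumes a simple hypothetical topology: one uplink, all remaining lanes
    split evenly into downstream ports of the same width.
    """
    if total_lanes < uplink_lanes:
        raise ValueError("not enough lanes for the uplink")
    return (total_lanes - uplink_lanes) // link_width


print(x16_devices(64))   # 3 x16 devices behind a 64-lane switch
print(x16_devices(144))  # 8 x16 devices behind a 144-lane switch (assumed lane count)
```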

Differentiator: COSMOS Software

The real standout is COSMOS, Astera’s rack-wide telemetry platform.

It provides real-time data on link quality, error rates, and temperatures, something no competitor offers today. This gives hyperscalers better visibility into their AI clusters and helps reduce downtime.
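To make fleet-level diagnostics concrete, here is a minimal sketch of the kind of check a rack-wide telemetry layer enables: collect per-link readings and flag outliers. The record fields, link names, and thresholds are hypothetical; this is not COSMOS’s actual data model or API.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class LinkSample:
    """One telemetry reading for a link (hypothetical schema, not the COSMOS API)."""
    link_id: str
    bit_errors: int        # errors observed during the sampling window
    bits_transferred: int  # bits moved during the same window
    temperature_c: float


def flag_unhealthy(
    samples: Iterable[LinkSample],
    max_error_rate: float = 1e-12,
    max_temp_c: float = 95.0,
) -> List[str]:
    """Return IDs of links whose error rate or temperature exceeds a limit.

    Threshold defaults are illustrative, not vendor recommendations.
    """
    flagged = []
    for s in samples:
        rate = s.bit_errors / s.bits_transferred if s.bits_transferred else 0.0
        if rate > max_error_rate or s.temperature_c > max_temp_c:
            flagged.append(s.link_id)
    return flagged


readings = [
    LinkSample("gpu0-cpu0", 0, 10**12, 62.0),
    LinkSample("gpu1-cpu0", 5, 10**12, 61.0),   # error rate 5e-12
    LinkSample("gpu2-cpu1", 0, 10**12, 101.0),  # running hot
]
print(flag_unhealthy(readings))  # ['gpu1-cpu0', 'gpu2-cpu1']
```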

Market Share

Astera claims to be present in over 80% of AI server platforms. This aligns with major hyperscaler wins: AWS, Microsoft, and Google all use Astera-powered connectivity.

The company also holds 90% market share in PCIe Gen5 retimers.

That is a dominant position in a critical product category.

Risks and Challenges

Astera’s current edge is its early start and tight hyperscaler integration. But there are risks:

Broadcom and Marvell will soon ship competing PCIe/CXL chips at larger scale.

NVLink-C2C and other on-board fabrics could reduce PCIe retimer demand inside GPU boards.

CPO (co-packaged optics) and optical chiplets may eventually bypass electrical retimers entirely.

If hyperscaler capex slows, Astera’s growth will decelerate.

Still, Astera is preparing for this. It’s already showing PCIe and CXL over optics, and it remains part of the UALink and Ultra Ethernet consortia, ensuring it’s present in future rack architectures.

Financials

Growth

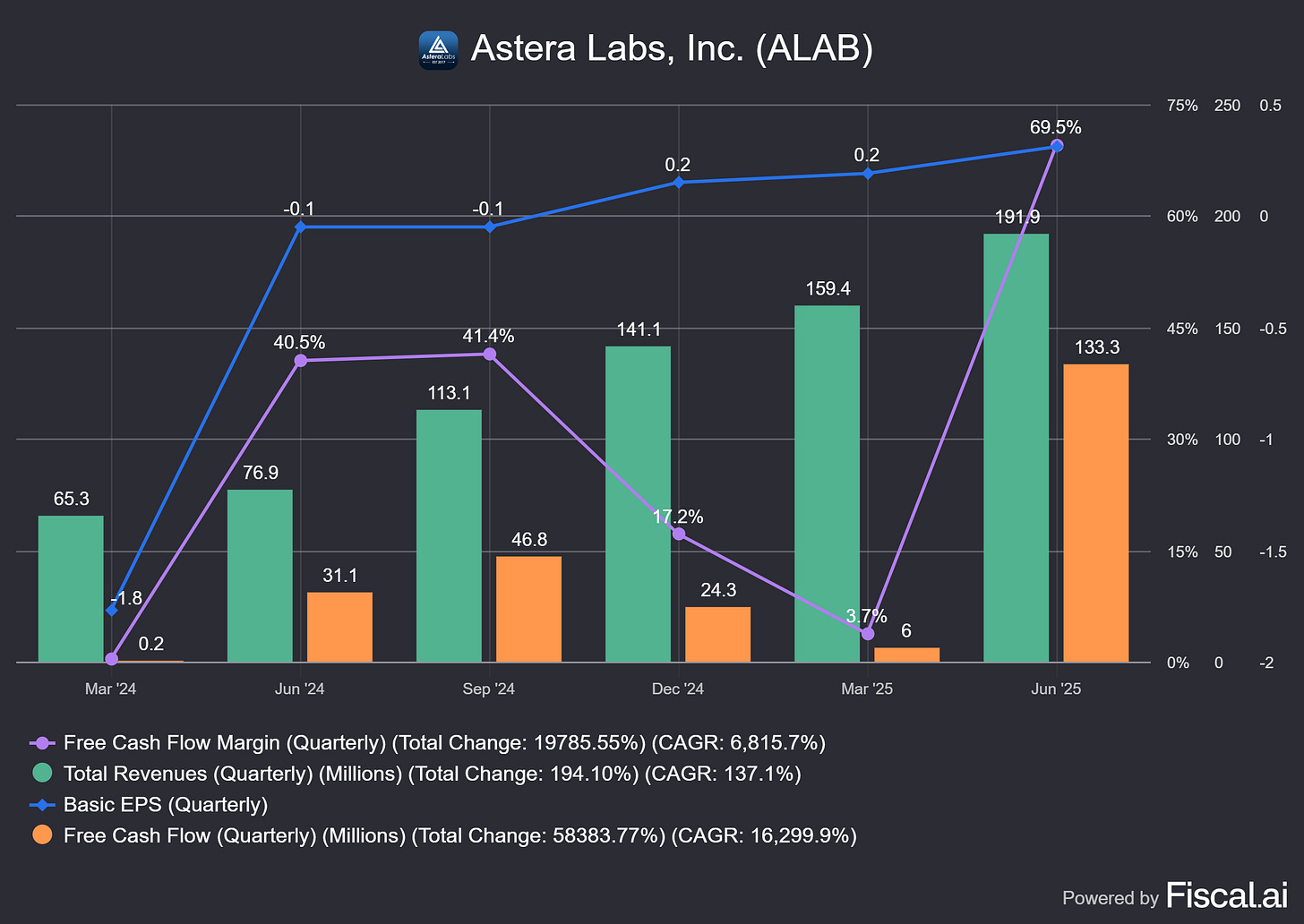

Astera has a history of pure hypergrowth:

2021: $34.8M revenue

2023: $116M revenue

2024: $396.3M revenue, up 242% year-over-year

H1 2025: $351M revenue, up 147% from H1 2024
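The growth figures can be checked directly. A short sketch using the revenue numbers above (in $M); the 2021 to 2024 CAGR and the implied H1 2024 base are derived here, not reported figures.

```python
# Revenue in $M, as cited above.
REVENUE = {2021: 34.8, 2023: 116.0, 2024: 396.3}
H1_2025 = 351.0
H1_2025_GROWTH = 1.47  # +147% year over year, as cited


def yoy_growth(curr: float, prev: float) -> float:
    """Year-over-year growth as a fraction (2.42 means +242%)."""
    return curr / prev - 1


def cagr(end: float, start: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    print(f"2024 YoY: {yoy_growth(REVENUE[2024], REVENUE[2023]):.0%}")        # 242%
    print(f"2021-2024 CAGR: {cagr(REVENUE[2024], REVENUE[2021], 3):.0%}")     # 125%
    print(f"Implied H1 2024: ${H1_2025 / (1 + H1_2025_GROWTH):.0f}M")         # $142M
```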

Margins

Margins are exceptional:

Gross margin: 76.4% in 2024, ~75% in H1 2025

High margins reflect the value and scarcity of its solutions

Profitability has arrived faster than expected:

2024: Net loss of $83.4M, driven by IPO-related stock-based compensation

H1 2025: Net income of $83M, showing strong operating leverage

Astera is investing heavily in R&D ($131M in H1 2025, or 37% of revenue), but revenue growth is outpacing costs, driving margin expansion.
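The operating-leverage point is simple arithmetic: if revenue grows faster than a cost line, that cost’s share of revenue shrinks. The starting figures are the H1 2025 numbers above; the growth rates in the example are hypothetical.

```python
H1_2025_REVENUE = 351.0  # $M, as cited
H1_2025_RD = 131.0       # $M, as cited


def cost_share_after(share: float, revenue_growth: float, cost_growth: float) -> float:
    """Cost as a share of revenue after one period of growth."""
    return share * (1 + cost_growth) / (1 + revenue_growth)


rd_share = H1_2025_RD / H1_2025_REVENUE
print(f"R&D share of revenue: {rd_share:.1%}")  # 37.3%

# Hypothetical: revenue +50%, R&D +30% over the next period.
print(f"After one period: {cost_share_after(rd_share, 0.50, 0.30):.1%}")  # 32.3%
```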

Balance Sheet

The balance sheet is strong:

Cash: $1.06B as of mid-2025

Debt: None

Positive cash flow: $136M in 2024, $146M in H1 2025

This gives Astera plenty of runway for new product launches and scaling. With no debt and over $1B in cash, it is well-positioned to fund growth and weather volatility.
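The cash flow figures line up with the 40%+ margin in the subtitle when measured against H1 2025 revenue. A quick check; the text doesn’t specify whether these are operating or free cash flow figures, so treat the label loosely.

```python
# $M, as cited above.
CASH_FLOW = {"2024": 136.0, "H1 2025": 146.0}
REVENUE = {"2024": 396.3, "H1 2025": 351.0}


def cash_flow_margin(period: str) -> float:
    """Cash flow as a fraction of revenue for the same period."""
    return CASH_FLOW[period] / REVENUE[period]


for period in CASH_FLOW:
    print(f"{period}: {cash_flow_margin(period):.1%}")
# 2024: 34.3%
# H1 2025: 41.6%
```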

Outlook

Astera’s opportunity is tied to the scaling of AI clusters, which are undergoing massive growth. For Q3 2025, Astera expects:

Revenue between $203M and $210M

Non-GAAP gross margin of ~75%

Non-GAAP operating expenses between $76M and $80M

Non-GAAP tax rate of ~20%

Non-GAAP diluted EPS of $0.38 to $0.39, based on ~180M diluted shares outstanding
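The guidance items chain together into EPS: revenue times gross margin, minus opex, plus other income, taxed at 20%, divided by ~180M shares. The guidance above doesn’t break out interest or other income, so `other_income` is an explicit assumption. At zero, the midpoint works out to about $0.34, which suggests the $0.38 to $0.39 range assumes roughly $10M of non-operating income (plausibly interest on the cash balance, though that is an inference, not guidance).

```python
def implied_eps(revenue, gross_margin, opex, tax_rate, shares, other_income=0.0):
    """Non-GAAP EPS in dollars; money inputs in $M, shares in millions."""
    operating_income = revenue * gross_margin - opex
    pretax_income = operating_income + other_income
    return pretax_income * (1 - tax_rate) / shares


def other_income_needed(target_eps, revenue, gross_margin, opex, tax_rate, shares):
    """Other income ($M) the model needs to reach target_eps."""
    pretax_needed = target_eps * shares / (1 - tax_rate)
    return pretax_needed - (revenue * gross_margin - opex)


# Midpoints of the Q3 2025 guidance listed above.
mid = dict(revenue=206.5, gross_margin=0.75, opex=78.0, tax_rate=0.20, shares=180.0)
print(f"EPS with no other income: ${implied_eps(**mid):.3f}")                   # $0.342
print(f"Other income for $0.385 EPS: ${other_income_needed(0.385, **mid):.2f}M")  # $9.75M
```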

Will Optics Disrupt Astera’s Business?

Copper: Astera’s foundation

Astera Labs built its business around short-reach connectivity inside AI servers. Most CPU, GPU, and switch links run only a few meters. Here, copper wins on cost, reliability, and latency.

At current speeds, copper links often need DSP retimers to go beyond one meter. That is Astera’s Aries line, extending PCIe and CXL reach and stability across AI servers.

This remains common practice in hyperscale data centers.

Optics: extending the reach

Once links stretch beyond about five to ten meters, fiber optics becomes the practical choice. That is how large clusters span multiple racks or rows.

Astera has already demonstrated PCIe and CXL over fiber. The company now offers both Active Electrical Cables over copper and Active Optical Cables over fiber, with COSMOS software managing either medium.

In short, Astera is embracing optics, not resisting it.

Where each technology fits

Copper with retimers: under five meters, lowest cost and lowest latency

Optical cables: beyond five to ten meters, connecting racks or rows

Coherent optics: long campus links across buildings

Result: coexistence. Copper dominates inside the rack, optics connects racks and rows, coherent links buildings.
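The rule of thumb above fits in a tiny lookup. A minimal sketch: the 5 m and 10 m cutoffs come from the text and are approximate; `CAMPUS_M` is a hypothetical boundary for building-to-building links, since none is given here.

```python
CAMPUS_M = 2_000.0  # hypothetical cutoff for campus (building-to-building) links


def suggested_medium(distance_m: float) -> str:
    """Map link length in meters to the medium the article associates with it."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < 5:
        return "copper with retimers"
    if distance_m <= 10:
        return "gray zone: copper with retimers or optical cable"
    if distance_m < CAMPUS_M:
        return "optical cable"
    return "coherent optics"


for d in (1, 7, 50, 5_000):
    print(d, "m ->", suggested_medium(d))
```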

The next frontier: co-packaged optics

The industry is moving toward co-packaged optics and silicon photonics, placing photonic I/O close to the networking or compute silicon.

Switches come first. Vendors are rolling out high-density switches with co-packaged optics to cut power per port and improve signal integrity.

Servers come later. Optical technology is not yet ready for broad use inside GPUs and general server I/O, which points to a gradual rollout.

Intel, NVIDIA, and others have shown optical I/O chiplets, proving out the long-term path even if costs still need to come down.

Will optics take some of Astera’s market share?

Yes. The first impact is at the switch layer, where co-packaged optics displaces some electrical paths and pluggables.

Over time, optical I/O on servers and accelerators could reduce certain copper cable and retimer uses. The shift is multi-year, not overnight.

Astera’s strategy: media-agnostic

Astera positions itself as media-agnostic.

Its retimers, Scorpio fabric switches, and COSMOS management software aim to control and monitor links over copper and fiber.

Important clarification: Astera does not build the optical components themselves.

It sells PCIe-over-optics endpoints and the COSMOS control plane, so copper and fiber behave like one managed fabric. The company is also building a scale-up fabric roadmap aligned with PCIe 6 and the emerging UALink topology.

What likely stays with copper for longer

Very short, latency-sensitive links on boards and inside racks where cost and simplicity matter

Rising attach rates for retimers with PCIe 6 and PCIe 7 as higher speeds increase signal loss
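Why do faster PCIe generations push retimer attach rates up? Copper loss grows with frequency, and the frequency that matters is roughly the Nyquist frequency of the signal. This sketch computes it from each generation’s per-lane rate and line coding (the published PCIe values). PCIe 6.0 keeps PCIe 5.0’s 16 GHz Nyquist by switching to PAM4, but each PAM4 eye is about a third the height of an NRZ eye, so loss margin still tightens; PCIe 7.0 then doubles Nyquist to 32 GHz.

```python
# Per-lane rate (GT/s) and line coding for recent PCIe generations.
GENERATIONS = {
    "PCIe 4.0": (16, "NRZ"),
    "PCIe 5.0": (32, "NRZ"),
    "PCIe 6.0": (64, "PAM4"),
    "PCIe 7.0": (128, "PAM4"),
}
BITS_PER_SYMBOL = {"NRZ": 1, "PAM4": 2}


def nyquist_ghz(rate_gt_s: float, coding: str) -> float:
    """Nyquist frequency = half the symbol rate; PAM4 sends 2 bits per symbol."""
    symbol_rate_gbaud = rate_gt_s / BITS_PER_SYMBOL[coding]
    return symbol_rate_gbaud / 2


for gen, (rate, coding) in GENERATIONS.items():
    print(f"{gen}: {rate} GT/s {coding}, Nyquist {nyquist_ghz(rate, coding):g} GHz")
```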

Where optics gains first

High-density switch ports using co-packaged optics to cut power per port

Rack-to-rack and row-to-row links as clusters spread out. Astera can sell into this with optical endpoints and COSMOS

Longer-term factors

Broad adoption of optical I/O on CPUs and GPUs, which could compress some copper-retimer volumes

Timing depends on cost, reliability, and manufacturing maturity at scale

Risk Scenarios

Base case, two to four years

Co-packaged optics ramps at switches. Copper plus DSP remains entrenched inside servers. Astera grows with AI server volumes and higher PCIe attach rates, and begins to mix in PCIe-over-optics endpoints and Scorpio fabric revenue.

Upside case

Astera wins more optical endpoints, expands scale-up fabrics in GPU nodes, and lifts COSMOS software attach. Content per rack rises even as optics expands.

Downside case, five years and beyond

Rapid server-side optical I/O adoption compresses copper-retimer use sooner than expected. Astera must shift more mix to optical endpoints, fabric silicon, and software to offset the decline.

Final takeaway

Optics will gain share, first at the switch and for rack-to-rack spans. Copper plus retimers remains critical inside the rack as PCIe 6 and PCIe 7 roll out.

Astera already extends PCIe and CXL over fiber and manages both media in COSMOS, so it can participate in the optical path rather than lose it.

The real risk is timing and execution.

If optical I/O moves into servers and accelerators sooner than expected, Astera will need to accelerate its mix of optical endpoints, fabric, and software.

Near term, the growth driver is still short-reach PCIe with retimers.

Over time, success depends on how well Astera rides the optics wave, not whether optics exists.

Valuation


Subscribe to Daniel Romero to keep reading this post and get 7 days of free access to the full post archives.
